Classical Cryptography Techniques: Foundations & Methods

Category: Cryptography

Unlocking the Secrets of Classical Cryptography Techniques

If you’re diving into the world of cryptography, cybersecurity, or secret communications, understanding classical cryptography techniques is foundational. Whether you’re a student aiming to grasp the mathematical principles behind historic cipher systems, a cryptography enthusiast curious about the evolution of secret communication, or a cybersecurity professional looking to deepen your knowledge of legacy methods, this guide is tailored for you. You’ve probably encountered modern encryption protocols and quantum-resistant algorithms, but classical cryptography forms the backbone of these developments – from substitution ciphers used by Julius Caesar to the sophisticated mechanical Enigma machine of WWII. This post breaks down those early methods, their mathematical basis, operational mechanics, and their historical significance, all while connecting how insights from these techniques fuel advancements in contemporary cryptology, including the challenges posed by quantum computing. Unlike generic superficial overviews, this article digs deeper into why these techniques mattered, how they worked, and what their limitations taught us about secure communication. Read on to build a strong foundation in classical cryptography that will sharpen your understanding and appreciation of modern cryptographic science and security.

- Unlocking the Secrets of Classical Cryptography Techniques

- Historical Overview of Classical Cryptography

- Mathematical Foundations of Classical Ciphers

- Substitution Ciphers: Classic Techniques and Their Cryptographic Impact

- Transposition Ciphers: Classical Techniques and Their Operation Mechanisms

- Polyalphabetic Ciphers and the Vigenère Cipher

- Mechanical Cryptography Devices: Revolutionizing Secret Communications

- Cryptanalysis Techniques for Classical Ciphers

- Impact of Classical Cryptography on Modern Cryptology

- The Quantum Threat and Classical Cryptography

Historical Overview of Classical Cryptography

The origins of classical cryptography trace back thousands of years, rooted in the fundamental human need for secret communication in warfare, diplomacy, and governance. Early civilizations like the Egyptians and Mesopotamians employed rudimentary forms of encryption, primarily through symbol substitution and transposition, to protect sensitive information. One of the earliest documented cipher systems is the Caesar cipher, attributed to Julius Caesar around 58 BCE, which involved shifting letters in the alphabet to encode messages. This method exemplified the balance between simplicity and functionality in ancient times.

Throughout history, several key figures shaped the evolution of cryptography. Al-Kindi, a 9th-century Arab polymath, pioneered frequency analysis, which enabled the systematic breaking of substitution ciphers and marked a major advance in cryptanalysis. Later, during the Renaissance, figures such as the German abbot Johannes Trithemius developed the tabula recta, which laid the groundwork for polyalphabetic ciphers such as the Vigenère cipher. These innovations emerged in settings where the stakes of communication, from battlefield strategy to espionage, demanded increasingly sophisticated methods for confidentiality and authentication. Understanding this historical backdrop illuminates not only the mechanics of classical techniques but also the strategic imperatives that drove cryptographic innovation across centuries.

Image courtesy of cottonbro studio

Mathematical Foundations of Classical Ciphers

At the core of classical cryptography lie several key mathematical principles that underpin how messages were encoded and decoded. Understanding these principles not only reveals why classical ciphers functioned but also highlights their vulnerabilities—knowledge that shaped modern cryptanalysis.

Modular Arithmetic: The Backbone of Substitution Ciphers

Many classical ciphers, including the famous Caesar cipher and its variations, rely heavily on modular arithmetic—a system of arithmetic for integers where numbers "wrap around" upon reaching a certain value, known as the modulus. For example, when working with the 26 letters of the English alphabet, arithmetic operations are performed modulo 26. This means that if a calculation exceeds 25 (indexed 0–25 for letters), it loops back to the beginning of the alphabet.

Modular arithmetic facilitates:

- Letter shifting: Encoding by shifting letters forward or backward a fixed number of positions.

- Mathematical representation: Treating letters as numbers allows simpler computational implementations of encryption and decryption.

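A basic Caesar cipher encrypts each plaintext letter \(P\) (written as its index 0–25) by applying the formula:

\[ C = (P + k) \bmod 26 \]

where \(C\) is the ciphertext letter and \(k\) is the shift key. Decryption applies the inverse shift, \(P = (C - k) \bmod 26\).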
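The formula translates almost directly into code. The following Python sketch is one possible implementation, assuming uppercase letters A to Z indexed 0 to 25 and leaving every other character (spaces, punctuation) unchanged; the function names are illustrative, not from any library.

```python
import string

ALPHABET = string.ascii_uppercase  # letters indexed 0..25


def caesar_encrypt(plaintext: str, k: int) -> str:
    """Apply C = (P + k) mod 26 to every letter; other characters pass through."""
    out = []
    for ch in plaintext.upper():
        if ch in ALPHABET:
            p = ALPHABET.index(ch)               # letter -> number
            out.append(ALPHABET[(p + k) % 26])   # shift and wrap around
        else:
            out.append(ch)
    return "".join(out)


def caesar_decrypt(ciphertext: str, k: int) -> str:
    """Decryption is the same shift in the opposite direction: P = (C - k) mod 26."""
    return caesar_encrypt(ciphertext, -k)


print(caesar_encrypt("ATTACK AT DAWN", 3))  # DWWDFN DW GDZQ
```

Python's `%` operator always returns a value in 0..25 for a positive modulus, even when `p + k` is negative, which is why decryption can simply reuse the encryption function with `-k`.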

Frequency Analysis: The Key to Breaking Monoalphabetic Ciphers

The principle of frequency analysis, introduced by Al-Kindi, exploits statistical regularities in natural language to break classical substitution ciphers. Since each language has characteristic letter frequencies (for example, 'E' is the most common letter in English), an attacker can analyze the ciphertext letter distribution to infer probable mappings to plaintext letters.

Key elements include:

- Frequency tables: Comparing ciphertext letter frequencies with known language frequencies.

- Pattern recognition: Identifying common words and repeated letter sequences to refine guesses.

- Limitations on security: Simple (monoalphabetic) substitution ciphers are vulnerable because they preserve letter frequency patterns.
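Counting letters is the first step of any frequency attack. This Python sketch, with illustrative function names, builds a relative frequency table and makes the crudest possible guess at a Caesar key by assuming the most common ciphertext letter stands for 'E'. On short texts that assumption often fails, so practical attacks compare the whole distribution rather than a single letter.

```python
import string
from collections import Counter


def letter_frequencies(text: str) -> list[tuple[str, float]]:
    """Relative frequency of each A-Z letter, most common first."""
    letters = [ch for ch in text.upper() if ch in string.ascii_uppercase]
    counts = Counter(letters)
    total = len(letters)
    return [(ch, n / total) for ch, n in counts.most_common()]


def guess_caesar_shift(ciphertext: str, assumed_top: str = "E") -> int:
    """Guess a Caesar key by assuming the commonest ciphertext letter is `assumed_top`."""
    top_letter = letter_frequencies(ciphertext)[0][0]
    return (ord(top_letter) - ord(assumed_top)) % 26


print(letter_frequencies("Hello")[0])  # ('L', 0.4)
print(guess_caesar_shift("HHHA"))      # 3, because H is three places after E
```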

Permutations and Transposition Ciphers

Classical transposition ciphers encode messages by rearranging letters based on a predefined system, rather than substituting them. Here, the concept of permutations—mathematical rearrangements of elements—becomes fundamental.

Important concepts involve:

- Permutation groups: The set of all possible rearrangements of n elements forms a group under composition (the symmetric group \(S_n\)), and each transposition key corresponds to one element of this group.

- Columnar transposition: Writing the plaintext into rows and reading out columns in a permuted order.

- Security through complexity: The number of possible permutations grows factorially (n!), providing a combinatorial explosion that increases resistance to brute-force attacks.
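A minimal sketch of these ideas, using a fixed-period transposition (a simpler relative of columnar transposition): the key is a permutation of the positions within each block, decryption applies the inverse permutation, and `math.factorial` gives the number of possible keys for a given block length. The function names and the padding letter `X` are assumptions for illustration.

```python
import math


def apply_permutation(block: str, perm: list[int]) -> str:
    """Output position i receives the input letter at position perm[i]."""
    return "".join(block[p] for p in perm)


def invert_permutation(perm: list[int]) -> list[int]:
    """Build the inverse permutation, so that applying both restores the block."""
    inverse = [0] * len(perm)
    for i, p in enumerate(perm):
        inverse[p] = i
    return inverse


def transpose(text: str, perm: list[int], pad: str = "X") -> str:
    """Permute the text block by block, padding the final block to the key period."""
    n = len(perm)
    if len(text) % n:
        text += pad * (n - len(text) % n)
    return "".join(apply_permutation(text[i:i + n], perm)
                   for i in range(0, len(text), n))


key = [2, 0, 3, 1]
ciphertext = transpose("ATTACKATDAWN", key)
plaintext = transpose(ciphertext, invert_permutation(key))
print(ciphertext, plaintext)                          # TAATACTKWDNA ATTACKATDAWN
print(math.factorial(len(key)), math.factorial(10))   # 24 3628800
```

The factorial line illustrates the combinatorial growth described above: a block of 4 positions admits only 24 keys, while a block of 10 already admits over 3.6 million.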

Together, modular arithmetic, frequency analysis, and permutations define the mathematical landscape in which classical ciphers operate. Recognizing these core principles allows a deeper appreciation of how early cryptographers balanced simplicity with security, and why these systems became stepping stones toward modern cryptographic algorithms.


Substitution Ciphers: Classic Techniques and Their Cryptographic Impact

Substitution ciphers form the foundation of classical encryption methods by transforming plaintext characters into ciphertext based on systematic letter replacements. These ciphers are among the earliest and most intuitive means for secret communication. Understanding their varied techniques—such as the Caesar cipher, monoalphabetic substitution, and homophonic substitution—sheds light on both their operational simplicity and cryptographic limitations.

Caesar Cipher: The Original Shift Cipher

The Caesar cipher is arguably the simplest substitution technique, historically attributed to Julius Caesar. It shifts each letter in the plaintext by a fixed number of positions down the alphabet. For example, with a shift of 3, 'A' becomes 'D', 'B' becomes 'E', and so forth, wrapping around at the end of the alphabet using modular arithmetic.

Strengths:

- Extremely easy to implement and understand.

- Offers basic confidentiality suitable for low-stakes communication.

Weaknesses:

- Very low security due to the limited key space of only 25 possible shifts.

- Vulnerable to brute-force attacks and frequency analysis because letter frequencies are preserved.
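With only 25 non-trivial keys, exhaustive search is trivial. This sketch (function names are illustrative) decrypts a ciphertext under every possible shift; a human, or a frequency score, then picks the candidate that reads as language.

```python
import string

ALPHABET = string.ascii_uppercase


def shift(text: str, k: int) -> str:
    """Shift every A-Z letter by k positions modulo 26; leave other characters alone."""
    return "".join(
        ALPHABET[(ALPHABET.index(ch) + k) % 26] if ch in ALPHABET else ch
        for ch in text.upper()
    )


def brute_force_caesar(ciphertext: str) -> list[tuple[int, str]]:
    """Try all 25 non-trivial keys and return (key, candidate plaintext) pairs."""
    return [(k, shift(ciphertext, -k)) for k in range(1, 26)]


for k, candidate in brute_force_caesar("KHOOR ZRUOG"):
    print(k, candidate)   # the line for k = 3 reads "HELLO WORLD"
```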

Monoalphabetic Ciphers: Beyond Simple Shifts

Expanding on Caesar’s approach, monoalphabetic substitution ciphers replace each plaintext letter with a unique ciphertext letter based on a scrambled fixed alphabet. This method uses a substitution alphabet as a key, providing 26! (approximately \(4 \times 10^{26}\)) possible keys, a massive increase over the Caesar cipher.

Advantages:

- Greater key space drastically complicates brute-force attempts.

- Simple to deploy with pen and paper.

Limitations:

- Despite the large key space, monoalphabetic ciphers are still susceptible to frequency analysis since each letter maps to exactly one substitute, preserving frequency patterns.

- Cracking is possible by analyzing ciphertext letter distributions and common linguistic structures.
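The following sketch shows both sides of this trade-off, assuming the key is a randomly shuffled alphabet (the helper names and the seed are illustrative). Python's built-in `str.maketrans` and `str.translate` perform the letter-for-letter substitution, and the final check confirms that the ciphertext's letter counts are merely relabelled versions of the plaintext's, which is exactly what frequency analysis exploits.

```python
import random
import string
from collections import Counter

ALPHABET = string.ascii_uppercase


def random_key(seed: int = 0) -> str:
    """A key is one shuffled copy of the alphabet (one of 26! possibilities)."""
    letters = list(ALPHABET)
    random.Random(seed).shuffle(letters)
    return "".join(letters)


def mono_encrypt(plaintext: str, key: str) -> str:
    """Replace the i-th letter of the alphabet with the i-th letter of the key."""
    return plaintext.upper().translate(str.maketrans(ALPHABET, key))


def mono_decrypt(ciphertext: str, key: str) -> str:
    """Invert the mapping by swapping the roles of key and alphabet."""
    return ciphertext.translate(str.maketrans(key, ALPHABET))


key = random_key(seed=7)
message = "DEFEND THE EAST WALL OF THE CASTLE"
secret = mono_encrypt(message, key)
assert mono_decrypt(secret, key) == message

# The multiset of counts is unchanged; only the letter labels differ.
print(sorted(Counter(message).values()) == sorted(Counter(secret).values()))  # True
```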

Homophonic Substitution: Mitigating Frequency Analysis

To counteract frequency analysis, homophonic substitution ciphers assign multiple ciphertext symbols to a single plaintext letter, especially common letters like 'E' or 'T'. This diffuses letter frequency patterns by distributing occurrences over a wider set of ciphertext characters.

How it works:

- High-frequency plaintext letters correspond to several potential ciphertext symbols to “flatten” frequency distribution.

- Low-frequency letters may have fewer or even just one corresponding ciphertext symbol.

Benefits:

- Reduces susceptibility to traditional frequency analysis.

- More secure than simple monoalphabetic substitution while remaining usable with pen and paper.

Drawbacks:

- Requires a larger ciphertext alphabet (symbols, numbers, or groups), increasing the complexity of cipher creation and communication.

- Still vulnerable to advanced statistical techniques and known-plaintext attacks.
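A minimal homophonic sketch, assuming a hypothetical table in which frequent letters receive several two-digit codes and every other letter receives one (40 codes in total). The allocation in `HOMOPHONE_COUNTS` is invented for illustration; historical homophonic tables used their own allocations. Encryption picks one homophone at random for each occurrence, while decryption stays a simple lookup because every code belongs to exactly one letter.

```python
import random
import string

# Hypothetical allocation: frequent letters get several homophones, others get one.
HOMOPHONE_COUNTS = {"E": 4, "T": 3, "A": 3, "O": 3, "I": 2, "N": 2, "S": 2, "H": 2, "R": 2}


def build_table(seed: int = 0) -> dict[str, list[str]]:
    """Assign shuffled two-digit codes ("00".."39") to the letters A-Z."""
    sizes = {ch: HOMOPHONE_COUNTS.get(ch, 1) for ch in string.ascii_uppercase}
    codes = [f"{n:02d}" for n in range(sum(sizes.values()))]
    random.Random(seed).shuffle(codes)
    table, start = {}, 0
    for ch, size in sizes.items():
        table[ch] = codes[start:start + size]
        start += size
    return table


def homophonic_encrypt(plaintext: str, table: dict[str, list[str]],
                       rng: random.Random) -> str:
    """Pick one of the letter's homophones at random for every occurrence."""
    return " ".join(rng.choice(table[ch]) for ch in plaintext.upper() if ch in table)


def homophonic_decrypt(ciphertext: str, table: dict[str, list[str]]) -> str:
    """Every code maps back to exactly one letter, so decryption is a lookup."""
    reverse = {code: ch for ch, codes in table.items() for code in codes}
    return "".join(reverse[code] for code in ciphertext.split())


table = build_table(seed=1)
secret = homophonic_encrypt("MEET ME THERE", table, random.Random(5))
print(secret)                              # repeated E's usually get different codes
print(homophonic_decrypt(secret, table))   # MEETMETHERE
```

Note that this sketch drops spaces, and that the larger code alphabet is visible in the output: each letter becomes a two-digit group.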

Substitution ciphers, from the straightforward Caesar cipher to the more sophisticated homophonic varieties, illustrate an evolutionary path aiming to balance ease of use and resistance to cryptanalysis. While their inherent vulnerabilities limited their long-term security, studying these techniques provides vital insights into the mathematical and linguistic challenges early cryptographers faced. These lessons underpin modern encryption design principles and illuminate why frequency analysis remains a fundamental cryptanalytic tool despite advances in algorithmic complexity.


Transposition Ciphers: Classical Techniques and Their Operation Mechanisms

Transposition ciphers represent a fundamental category of classical cryptographic techniques that secure messages by rearranging the order of characters rather than substituting them. Unlike substitution ciphers, which replace letters with other letters or symbols, transposition ciphers keep the original plaintext characters but shuffle their positions according to a defined algorithm or key. As a result, the ciphertext has exactly the same letter frequencies as the plaintext: single-letter frequency analysis cannot reveal the key (although it does reveal that a transposition was used), and confidentiality rests on the difficulty of recovering the permutation.

Rail Fence Cipher: Zigzag Permutation

One of the simplest classical transposition ciphers is the rail fence cipher, which arranges plaintext characters in a zigzag pattern across multiple "rails" or rows. To encrypt, the message is written diagonally down and up across the rails and then read off row by row to create the ciphertext. For example, encoding the phrase "CLASSICAL CIPHERS" (with the space removed) on two rails alternates the letters between the rails:

Rail 1: C A S C L I H R

Rail 2: L S I A C P E S

Reading rail 1 and then rail 2 produces the ciphertext CASCLIHRLSIACPES. Decryption reverses this process by reconstructing the zigzag pattern from the known number of rails. Though straightforward, the rail fence cipher offers only minimal security because of its predictability and small key space (the number of rails), which makes exhaustive trial feasible for attackers.
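One compact way to implement the rail fence for any number of rails is to record which rail each position lands on, then sort positions by rail. The sketch below (function names are illustrative) uses that ordering for both directions: encryption reads letters in that order, and decryption writes them back to the same positions.

```python
def rail_order(length: int, rails: int) -> list[int]:
    """Plaintext positions in the order they are read off, rail by rail."""
    rail_of, row, step = [], 0, 1
    for _ in range(length):
        rail_of.append(row)
        if rails > 1:
            if row == 0:
                step = 1            # bounce off the top rail
            elif row == rails - 1:
                step = -1           # bounce off the bottom rail
            row += step
    # Sort positions by rail first, then by position within the rail.
    return sorted(range(length), key=lambda i: (rail_of[i], i))


def rail_fence_encrypt(plaintext: str, rails: int) -> str:
    return "".join(plaintext[i] for i in rail_order(len(plaintext), rails))


def rail_fence_decrypt(ciphertext: str, rails: int) -> str:
    """Put each ciphertext letter back at the zigzag position it was read from."""
    result = [""] * len(ciphertext)
    for ch, pos in zip(ciphertext, rail_order(len(ciphertext), rails)):
        result[pos] = ch
    return "".join(result)


print(rail_fence_encrypt("CLASSICALCIPHERS", 2))   # CASCLIHRLSIACPES
print(rail_fence_decrypt("CASCLIHRLSIACPES", 2))   # CLASSICALCIPHERS
```

Because the only key is the number of rails, an attacker can simply call the decryption function for every plausible rail count.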

Columnar Transposition: Matrix-Based Rearrangement

The columnar transposition cipher significantly enhances complexity by writing the plaintext in rows within a matrix defined by a keyword that determines the column order. Encryption involves:

- Writing the plaintext row-wise into a grid beneath the keyword.

- Permuting columns based on the alphabetical order of keyword letters.

- Reading columns vertically in the new order to generate ciphertext.

For example, with the keyword CRYPTO, the columns are read in the alphabetical order of the keyword letters: C = 1, O = 2, P = 3, R = 4, T = 5, Y = 6. The column under C (the first) is read first, then the column under O (the last), then those under P, R, T, and Y, which drastically changes the letter sequence in the ciphertext.

This method exploits the mathematical principle of permutations: a keyword of length n allows up to n! column orders, so longer keywords rapidly enlarge the key space. Because letter identities remain intact and only their positions change, columnar transposition resists simple frequency analysis, but repeated patterns and known plaintext can still weaken its security.
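The steps can be sketched in Python as follows. This version assumes an unpadded (irregular) grid, so the last row may be short, and breaks ties between repeated keyword letters left to right; historical practice varied on both points, and the function names are illustrative. For CRYPTO the zero-based reading order comes out as columns 0, 5, 3, 1, 4, 2, matching the ranking C, O, P, R, T, Y.

```python
def column_order(keyword: str) -> list[int]:
    """Column indices in reading order: alphabetical by keyword letter,
    with ties (repeated letters) broken left to right."""
    return sorted(range(len(keyword)), key=lambda i: (keyword[i], i))


def columnar_encrypt(plaintext: str, keyword: str) -> str:
    n = len(keyword)
    # plaintext[col::n] is column `col` of the grid, read top to bottom
    return "".join(plaintext[col::n] for col in column_order(keyword))


def columnar_decrypt(ciphertext: str, keyword: str) -> str:
    n = len(keyword)
    full_rows, extra = divmod(len(ciphertext), n)
    # The first `extra` columns hold one more letter than the rest.
    lengths = [full_rows + (1 if col < extra else 0) for col in range(n)]
    columns, pos = [""] * n, 0
    for col in column_order(keyword):
        columns[col] = ciphertext[pos:pos + lengths[col]]
        pos += lengths[col]
    # Read the refilled grid back row by row.
    return "".join(columns[col][row]
                   for row in range(full_rows + (1 if extra else 0))
                   for col in range(n) if row < len(columns[col]))


print(column_order("CRYPTO"))                          # [0, 5, 3, 1, 4, 2]
print(columnar_encrypt("CLASSICALCIPHERS", "CRYPTO"))  # CCHIPSCSLAESIALR
```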

Security and Limitations of Classical Transposition Ciphers

Despite their structural elegance, transposition ciphers share key vulnerabilities:

- Preservation of letter frequencies: Since letters remain unchanged, frequency analysis combined with pattern detection can assist attackers.

- Susceptibility to anagramming: Skilled cryptanalysts can rearrange fragments of the ciphertext until readable plaintext emerges.

- Key reuse risks: Using the same permutation keys repeatedly reduces security over time.

Nevertheless, transposition ciphers laid critical groundwork for modern permutation-based cryptographic algorithms and illustrate how mathematical permutation theory intertwines with encryption mechanics. Studying their inner workings enhances understanding of both historical methods and the mathematical landscape underlying contemporary secure communication—particularly in preparing for new challenges posed by quantum cryptanalysis.


Polyalphabetic Ciphers and the Vigenère Cipher

One of the critical limitations of monoalphabetic substitution ciphers is their vulnerability to frequency analysis, since each plaintext letter maps to a single ciphertext symbol, preserving language letter frequency patterns. To address this weakness, classical cryptographers developed polyalphabetic ciphers, which employ multiple substitution alphabets to encrypt a message, thereby obfuscating frequency patterns and significantly enhancing security over monoalphabetic methods.

Understanding Polyalphabetic Substitution

In polyalphabetic ciphers, the encryption process uses a series of different substitution alphabets, cycling through them based on a keyword or key phrase. Each letter in the plaintext is encrypted with a different cipher alphabet depending on its position and the corresponding key letter. This approach breaks the one-to-one correspondence between plaintext and ciphertext letters found in monoalphabetic ciphers, diffusing the frequency distribution which makes direct frequency analysis far less effective.

Key features include:

- Multiple cipher alphabets: Rather than a single fixed substitution, multiple alphabets rotate during encryption.

- Keyword-driven variation: The key determines which alphabet applies to each letter, enabling a dynamic substitution process.

- Increased key complexity: The effective keyspace grows exponentially relative to the keyword length, adding layers of complexity for cryptanalysis.

The Vigenère Cipher: A Landmark in Polyalphabetic Encryption

The Vigenère cipher, long nicknamed “le chiffre indéchiffrable” (the indecipherable cipher), is the most famous classical example of a polyalphabetic cipher. Originally described by Giovan Battista Bellaso and later misattributed to Blaise de Vigenère, this cipher significantly improved security over simpler substitution techniques through its systematic use of the tabula recta, a 26 × 26 table whose rows are the alphabet under every possible Caesar shift.

How the Vigenère Cipher Works

- Key Generation: A keyword is selected and repeated to match the length of the plaintext.

- Encryption: Each plaintext letter is shifted along the alphabet by the index of the corresponding key letter (A = 0, B = 1, and so on up to Z = 25). Mathematically, encryption applies modular addition:

\[ C_i = (P_i + K_i) \bmod 26 \]

where \(P_i\) and \(K_i\) are the numerical indices of the plaintext and key letters at position \(i\), and \(C_i\) is the resulting ciphertext letter.

- Decryption: The process reverses using modular subtraction:

\[ P_i = (C_i - K_i) \bmod 26 \]

This method creates a polyalphabetic substitution, cycling through multiple Caesar shifts determined by the key.
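The two formulas fit in one short function. This Python sketch assumes the keyword consists only of letters and that only A to Z in the message consume key letters (spaces and punctuation are dropped); the function name is illustrative. In the classic example below, the two A's at the start and in the middle of ATTACK come out as different letters (L and O), which is the frequency-masking effect in action.

```python
import string

ALPHABET = string.ascii_uppercase


def vigenere(text: str, keyword: str, decrypt: bool = False) -> str:
    """C_i = (P_i + K_i) mod 26 to encrypt, P_i = (C_i - K_i) mod 26 to decrypt."""
    shifts = [ALPHABET.index(k) for k in keyword.upper()]
    sign = -1 if decrypt else 1
    out, i = [], 0
    for ch in text.upper():
        if ch not in ALPHABET:
            continue                       # this sketch drops non-letters
        k = shifts[i % len(shifts)]        # the keyword repeats over the message
        out.append(ALPHABET[(ALPHABET.index(ch) + sign * k) % 26])
        i += 1
    return "".join(out)


secret = vigenere("ATTACK AT DAWN", "LEMON")
print(secret)                                   # LXFOPVEFRNHR
print(vigenere(secret, "LEMON", decrypt=True))  # ATTACKATDAWN
```

A one-letter keyword reduces the function to a Caesar cipher, which makes the relationship between the two schemes explicit.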

Security Advantages Over Monoalphabetic Ciphers

- Frequency Masking: Because the same plaintext letter can encrypt differently depending on the key position, letter frequency patterns are blurred, thwarting standard frequency analysis.

- Expanded Key Space: The security depends heavily on the length and randomness of the keyword. In the limit, a truly random key as long as the message and never reused turns the scheme into a one-time pad.

- Cryptanalytic Challenges: Traditional cryptanalysis requires more sophisticated techniques like the Kasiski examination or Friedman test to estimate key length and break the cipher.

However, the Vigenère cipher is not impervious. When keywords are short or reused extensively, patterns emerge that allow skilled cryptanalysts to break the cipher. Despite these limitations, it was a major leap forward: no general method for breaking it was published until Friedrich Kasiski’s in 1863, roughly three centuries after Bellaso described it, and its polyalphabetic principle carried over into the mechanized ciphers of the 20th century.

By leveraging polyalphabetic substitution with dynamic keyed shifts, the Vigenère cipher advanced classical cryptography from vulnerable monoalphabetic schemes into more robust territory. It elegantly demonstrates how mathematical concepts like modular arithmetic and the tabula recta matrix contribute to enhancing secrecy. Studying the Vigenère cipher's strengths and weaknesses not only enriches our historical perspective but also deepens our understanding of polyalphabetic encryption principles that influence many modern cryptographic algorithms.

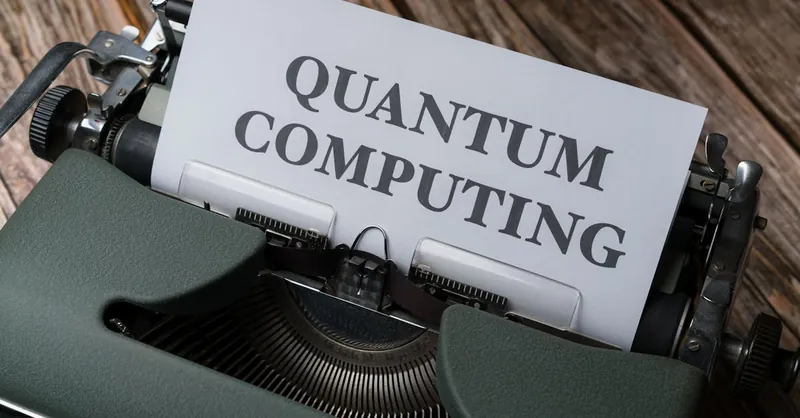

Image courtesy of Markus Winkler

Mechanical Cryptography Devices: Revolutionizing Secret Communications

While classical ciphers laid the theoretical foundations of encryption, the evolution of mechanical cryptography devices marked a transformative leap in the practical implementation of secure communication. These machines automated complex cipher operations, allowing for faster, more reliable encryption and decryption—especially critical during wartime and diplomatic exchanges when speed and accuracy could change the course of history.

The Jefferson Disk: Early Mechanical Innovation

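Invented by Thomas Jefferson in the late 18th century and later reinvented independently by Étienne Bazeries (hence its other name, the Bazeries cylinder), the Jefferson disk was a pioneering mechanical aid for polyalphabetic substitution. It consisted of a set of rotating disks, each inscribed with a differently scrambled alphabet, mounted on a spindle; the secret key was the order in which the disks were stacked. To encrypt, the user turned the disks until the plaintext appeared along one row, then copied the letters of another row as the ciphertext. The recipient, holding the same disks in the same order, set the ciphertext along one row and looked around the cylinder for the row that read as plain language.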

The Jefferson disk offered:

- Increased key complexity: By permuting disk order and rotations, it generated a vast number of cipher variants.

- Practical usability: Mechanization reduced human error and increased encryption speed compared to manual ciphers.

- Design influence: It inspired later electromechanical cipher machines by demonstrating how mechanical rotation and alignment could effect complex permutations.
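A simplified software model of the wheel cipher, under two stated assumptions: the disk alphabets are randomly generated (hypothetical, not Jefferson's actual disks), and sender and recipient agree on a fixed row offset instead of the recipient searching the cylinder for the readable row. Letter j of the message uses disk `order[j % len(order)]`, so the stacking order acts as the key.

```python
import random
import string

ALPHABET = string.ascii_uppercase


def make_disks(count: int, seed: int = 0) -> list[str]:
    """Hypothetical disk set: each disk carries its own scrambled alphabet."""
    rng = random.Random(seed)
    return ["".join(rng.sample(ALPHABET, 26)) for _ in range(count)]


def wheel_cipher(text: str, disks: list[str], order: list[int], offset: int) -> str:
    """Find each letter on its disk and read the letter `offset` places further
    round the same disk. Using the negative offset undoes the encryption."""
    letters = [c for c in text.upper() if c in ALPHABET]
    out = []
    for j, ch in enumerate(letters):
        disk = disks[order[j % len(order)]]
        out.append(disk[(disk.index(ch) + offset) % 26])
    return "".join(out)


disks = make_disks(10, seed=42)
key = [3, 7, 0, 9, 1, 5, 2, 8, 6, 4]   # secret stacking order of the 10 disks
secret = wheel_cipher("ATTACK AT DAWN", disks, key, offset=6)
print(wheel_cipher(secret, disks, key, offset=-6))   # ATTACKATDAWN
```

With n distinct disks there are n! possible stacking orders, which is where the device's large key space comes from.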

The Enigma Machine: The Icon of Mechanical Cryptography

The most famous cipher machine of its era, the electromechanical Enigma, marked the peak of rotor-based encryption and of the cryptanalytic challenge it posed in the first half of the 20th century. Used extensively by Germany during World War II, the Enigma combined a set of stepping rotors, a plugboard, and a reflector to perform polyalphabetic substitution with a cipher alphabet that changed at every keystroke.

Key aspects of the Enigma’s mechanics include:

- Rotors: Each rotor implemented a fixed substitution. The rightmost rotor advanced one position with every keystroke and the others stepped less often, like an odometer, so the overall cipher alphabet changed continuously.

- Plugboard (Steckerbrett): Allowed manual swapping of letter pairs, exponentially increasing the keyspace and complexity.

- Reflector: Permuted signals back through the rotors, enabling the same machine settings for both encryption and decryption.
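To see what the reflector does, this toy model uses a single stepping rotor and a reflector, with no plugboard, ring settings, or multi-rotor stepping. The wiring strings are the ones commonly published for Enigma rotor I and reflector B, but the model is only an illustration of the principle, not a faithful Enigma simulator. Because the signal passes through the rotor, is swapped by the reflector, and returns through the same rotor, each keystroke applies an involution with no fixed points: the same settings decrypt, and no letter ever encrypts to itself, a structural weakness that Allied codebreakers exploited.

```python
import string

ALPHABET = string.ascii_uppercase
ROTOR = "EKMFLGDQVZNTOWYHXUSPAIBRCJ"      # commonly published wiring of rotor I
REFLECTOR = "YRUHQSLDPXNGOKMIEBFZCWVJAT"  # commonly published wiring of reflector B


def toy_enigma(text: str, start: int = 0) -> str:
    """One-rotor toy: the rotor steps before each letter, the signal passes through
    the rotor, bounces off the reflector, and returns through the rotor."""
    pos, out = start, []
    for ch in text.upper():
        if ch not in ALPHABET:
            continue
        pos = (pos + 1) % 26                                        # rotor steps
        c = ALPHABET.index(ch)
        c = (ALPHABET.index(ROTOR[(c + pos) % 26]) - pos) % 26      # forward pass
        c = ALPHABET.index(REFLECTOR[c])                            # reflector swap
        c = (ROTOR.index(ALPHABET[(c + pos) % 26]) - pos) % 26      # return pass
        out.append(ALPHABET[c])
    return "".join(out)


secret = toy_enigma("ATTACKATDAWN", start=5)
print(toy_enigma(secret, start=5))   # ATTACKATDAWN: the same settings decrypt
```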

The machine’s immense keyspace, estimated at around \(10^{114}\) possible configurations, made breaking Enigma ciphers a formidable task. However, Allied cryptanalysts, leveraging mathematical insight, operational intelligence, and electromechanical code-breaking machines such as the Bombe, eventually cracked Enigma-encrypted communications, significantly impacting the war’s outcome.
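Published Enigma keyspace figures vary widely with what is counted. As one worked example, assuming the standard Army setup (three rotors chosen in order from a box of five, 26 starting positions per rotor, ten plugboard cables) and ignoring ring settings, the number of daily settings can be computed exactly with Python's integer arithmetic:

```python
from math import factorial, perm

rotor_orders = perm(5, 3)        # ordered choice of 3 rotors from 5: 60
start_positions = 26 ** 3        # each rotor can start at any of 26 letters: 17,576
# Ten cables pair up 20 of the 26 letters; the remaining 6 stay unplugged.
# Divide out the order of the 10 pairs and the orientation of each pair.
plugboard = factorial(26) // (factorial(6) * factorial(10) * 2 ** 10)

total = rotor_orders * start_positions * plugboard
print(f"{plugboard:,}")   # 150,738,274,937,250
print(f"{total:,}")       # 158,962,555,217,826,360,000 (about 1.6 x 10^20)
```

Almost all of this comes from the plugboard, which shows why it was such an effective addition, even though it left the rotor stepping untouched.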

Impact on Cryptographic Science and Modern Encryption

Mechanical cryptography devices like the Jefferson disk and Enigma revolutionized secret communications by:

- Automating complex substitution and permutation processes, thus scaling cipher operations beyond manual limits.

- Introducing dynamic polyalphabetic substitution with rapid key changes, significantly increasing cryptographic strength.

- Highlighting practical challenges in key management and operational security, which remain critical in modern cryptography.

- Spurring advancements in computational cryptanalysis, as breaking mechanical ciphers demanded innovative mathematical and engineering solutions.

These machines bridged classical cryptographic theory and modern computer-based encryption, underscoring how mechanical ingenuity and mathematical principles intertwined to safeguard—and challenge—the secrecy of communications. Their legacy persists in contemporary cryptology, where the drive to balance algorithmic complexity, operational efficiency, and security continues to evolve against the emergent threat of quantum computing.


Cryptanalysis Techniques for Classical Ciphers

Cryptanalysis—the art and science of deciphering encrypted messages without prior knowledge of the key—played a crucial role in exposing the vulnerabilities of classical ciphers. Early cryptanalysts developed systematic methods that exploited inherent weaknesses in classical encryption schemes, primarily through statistical and pattern-based approaches. Three cornerstone techniques illustrate how cryptanalysis evolved to challenge and eventually break many classical ciphers: frequency analysis, the Kasiski examination, and other decryption strategies like the index of coincidence.

Frequency Analysis: Exploiting Language Patterns

Developed by the 9th-century polymath Al-Kindi, frequency analysis remains one of the most powerful tools for attacking substitution ciphers. This method hinges on the predictable distribution of letters and common patterns in natural languages—for instance, the letter 'E' appearing more frequently in English text than all others. By analyzing the ciphertext's letter frequency and comparing it to known language statistics, cryptanalysts could infer plausible substitutions and gradually reconstruct the original plaintext.

Key aspects include:

- Identification of the most frequent ciphertext letters likely corresponding to common plaintext letters.

- Recognition of frequently occurring digraphs (two-letter sequences) and trigraphs to refine guesses.

- Adaptation to homophonic ciphers, which spread common letters over several ciphertext symbols: this flattens single-letter counts, but the ciphertext remains vulnerable to analysis of letter contexts and other advanced statistical scrutiny.
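Counting digraphs and trigraphs takes only a few lines. This sketch (the function name is illustrative) counts overlapping n-letter sequences after stripping non-letters; in English plaintext sequences such as TH and THE are very common, and a cryptanalyst compares the ciphertext's most frequent n-grams against such expectations.

```python
from collections import Counter


def ngram_counts(text: str, n: int) -> Counter:
    """Count overlapping n-letter sequences (digraphs for n=2, trigraphs for n=3)."""
    letters = "".join(ch for ch in text.upper() if "A" <= ch <= "Z")
    return Counter(letters[i:i + n] for i in range(len(letters) - n + 1))


print(ngram_counts("THE CAT AND THE HAT", 3).most_common(1))   # [('THE', 2)]
```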

Kasiski Examination: Unveiling Polyalphabetic Keys

While frequency analysis falters against polyalphabetic ciphers like the Vigenère cipher due to their shifting alphabets, the Kasiski examination, developed by Friedrich Kasiski in the 19th century, provided a breakthrough. This technique focuses on locating repeated ciphertext sequences, which likely correspond to repeated plaintext segments encrypted with the same key portion.

The Kasiski method involves:

- Scanning ciphertext for recurring patterns of letters.

- Measuring the distances between these repetitions.

- Calculating the greatest common divisors (GCDs) of these distances to hypothesize the key length.

Once the key length is estimated, cryptanalysts can segment the ciphertext into multiple Caesar cipher subsets and apply frequency analysis to each, effectively reducing polyalphabetic ciphers to manageable monoalphabetic problems.
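A minimal sketch of the examination as described, with illustrative function names: record where each three-letter sequence occurs, collect the distances between successive repeats, and take the GCD. Accidental repeats can drag the GCD down to 1, so practical tools usually tally common factors instead; this version simply returns every length from 2 up to a limit that divides the GCD. The demonstration ciphertext is THEFOXSAWTHE encrypted under the hypothetical keyword SUN, where the repeated THE happens to line up with the same key letters.

```python
from collections import defaultdict
from functools import reduce
from math import gcd


def repeat_distances(ciphertext: str, length: int = 3) -> list[int]:
    """Distances between successive occurrences of each repeated letter sequence."""
    positions = defaultdict(list)
    for i in range(len(ciphertext) - length + 1):
        positions[ciphertext[i:i + length]].append(i)
    return [later - earlier
            for starts in positions.values()
            for earlier, later in zip(starts, starts[1:])]


def candidate_key_lengths(ciphertext: str, length: int = 3,
                          max_len: int = 20) -> list[int]:
    """Key lengths (2..max_len) that divide the GCD of all repeat distances."""
    distances = repeat_distances(ciphertext, length)
    if not distances:
        return []
    g = reduce(gcd, distances)
    return [d for d in range(2, min(g, max_len) + 1) if g % d == 0]


print(repeat_distances("LBRXIKKUJLBR"))       # [9]
print(candidate_key_lengths("LBRXIKKUJLBR"))  # [3, 9]
```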

Additional Decryption Strategies: Index of Coincidence and Beyond

Building on Kasiski’s work, the index of coincidence (IC)—introduced by William Friedman—quantifies the likelihood that two randomly chosen letters from a text are identical. This statistical measure helps estimate the key length of polyalphabetic ciphers and detect deviations from random letter distributions.
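The index of coincidence is \(\sum_i n_i(n_i - 1) / (N(N - 1))\), where \(n_i\) is the count of letter \(i\) and \(N\) the total number of letters. English text scores roughly 0.066, while uniformly random letters score about 1/26, or 0.0385. This sketch (function names are illustrative) also averages the IC over the interleaved columns produced by a guessed key length: when the guess is right, each column is a single Caesar shift and its IC rises toward the English value.

```python
from collections import Counter


def index_of_coincidence(text: str) -> float:
    """Probability that two letters drawn without replacement are identical."""
    letters = [ch for ch in text.upper() if "A" <= ch <= "Z"]
    n = len(letters)
    if n < 2:
        return 0.0
    counts = Counter(letters)
    return sum(c * (c - 1) for c in counts.values()) / (n * (n - 1))


def average_ic_for_period(ciphertext: str, period: int) -> float:
    """Split the text into `period` interleaved columns and average their ICs."""
    columns = [ciphertext[i::period] for i in range(period)]
    return sum(index_of_coincidence(col) for col in columns) / period


print(round(index_of_coincidence("AABB"), 3))   # 0.333
print(average_ic_for_period("ABAB", 2))         # 1.0
```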

Other classical cryptanalytic strategies include:

- Known-plaintext attacks, leveraging partial knowledge of the plaintext to uncover cipher keys.

- Crib-dragging, using guessed or known segments (cribs) to align and test key guesses.

- Anagramming and pattern searching for transposition ciphers by rearranging ciphertext letters to detect intelligible phrases.

Together, these cryptanalysis techniques expose fundamental weaknesses in classical ciphers by harnessing language statistics, mathematical insights, and methodical pattern detection. Understanding these strategies not only illuminates historical cryptographic breakthroughs but also underscores enduring principles that influence modern cryptanalysis, including the challenges quantum computing introduces to encryption security.


Impact of Classical Cryptography on Modern Cryptology

Classical cryptography techniques laid the essential groundwork for modern cryptology, influencing the design, analysis, and implementation of contemporary encryption algorithms. The mathematical ideas that classical ciphers relied on or exposed, such as modular arithmetic, permutation theory, and frequency analysis, still inform how modern cryptosystems achieve confidentiality, integrity, and authentication. For example, the substitution and transposition operations of classical ciphers reappear, in far more elaborate form, in the substitution-permutation networks used by modern symmetric ciphers such as AES.

However, classical systems revealed inherent limitations that catalyzed the evolution toward more sophisticated cryptographic frameworks:

- Limited Key Space and Predictability: Many classical ciphers suffered from small key spaces (e.g., Caesar cipher’s 25 shifts) or predictable patterns allowing straightforward cryptanalysis through brute-force or frequency analysis.

- Deterministic Mappings: Monoalphabetic schemes map single letters to unique substitutes, preserving statistical language characteristics that attackers exploit.

- Static Keys and Lack of Dynamic Complexity: Unlike modern algorithms, most classical ciphers use static keys without the high entropy and key expansion mechanisms that safeguard contemporary ciphers.

- Inability to Withstand Computational Advances: Advancements in mathematics and computing power rendered many classical ciphers obsolete, making way for algorithms with stronger cryptographic primitives.

These constraints led cryptographers to develop complex cryptographic paradigms such as:

- Block and stream ciphers with dynamic substitution and permutation layers.

- Public-key cryptography, enabling secure communication without a pre-shared secret key.

- Mathematically rigorous frameworks like trapdoor functions and probabilistic encryption.

Moreover, the challenges observed in classical cryptanalysis directly influenced the rise of computational cryptanalysis and the implementation of mechanisms that achieve provable security under well-defined hardness assumptions. In the era of quantum technology, understanding these classical foundations is more critical than ever, as the cryptographic community explores how to evolve beyond the vulnerabilities exposed by quantum algorithms like Shor’s and Grover’s.

In essence, classical cryptography provides not only a historical perspective but also a conceptual roadmap, highlighting both successes and vulnerabilities that deeply inform the design and assessment of today’s encryption standards and ongoing research in quantum-resistant cryptology.


The Quantum Threat and Classical Cryptography

Emerging quantum technologies present a profound challenge to the classical cryptography methods that have long secured digital communications. (In this context, "classical" means conventional, non-quantum cryptography such as RSA, not only the historical ciphers discussed earlier.) Shor’s algorithm threatens to efficiently solve problems, namely integer factorization and discrete logarithms, that underpin many widely used public-key schemes, including RSA, ECC, and Diffie-Hellman key exchange. This capability renders these public-key cryptosystems fundamentally vulnerable once sufficiently powerful quantum computers become practical.

Key reasons why quantum computing jeopardizes classical cryptography include:

- Exponential Speedup in Factoring and Discrete Logarithms: Shor’s algorithm solves these problems in polynomial time, whereas the best known classical algorithms require super-polynomial time, so public-key systems built on them would fall.

- Grover’s Algorithm Impact on Symmetric Ciphers: Although symmetric algorithms like AES are more resistant, Grover’s quadratic speedup for brute-force key search effectively halves their security level measured in bits, prompting the need for longer keys.

- Inadequacy of Classical Hard Problems Against Quantum Attacks: Many classical security assumptions no longer hold in the quantum model, invalidating the long-term trust in traditional cryptographic primitives.
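A rough worked example of the halving: classical exhaustive search over \(N\) keys takes on the order of \(N\) trials, while Grover’s algorithm needs about \(\frac{\pi}{4}\sqrt{N}\) quantum iterations. Ignoring constant factors and the large overheads of real quantum hardware:

\[ N = 2^{128} \;\Rightarrow\; \sqrt{N} = 2^{64}, \qquad N = 2^{256} \;\Rightarrow\; \sqrt{N} = 2^{128} \]

So a 128-bit key offers roughly 64 bits of security against Grover search and a 256-bit key roughly 128 bits, which is why the usual response is to lengthen symmetric keys rather than abandon the ciphers.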

Given this looming threat, the cryptographic community is actively pursuing quantum-resistant cryptography—also called post-quantum cryptography (PQC)—which designs algorithms based on mathematical problems believed to withstand quantum attacks. These include lattice-based, hash-based, code-based, and multivariate polynomial cryptosystems. Transitioning to PQC is critical to ensure the confidentiality and integrity of both existing data and future communications in a post-quantum world.

The importance of beginning this transition now cannot be overstated:

- Data Harvesting Risks: Adversaries may record encrypted data today and decrypt it later once quantum capabilities emerge.

- Long-Term Security Needs: Sensitive data with extended confidentiality requirements demands forward-looking cryptographic safeguards.

- Interoperability and Standardization: Early adoption enables smoother migration and testing of quantum-safe protocols within existing infrastructure.

In sum, the historical ciphers were foundational to secure communication, but the conventional public-key systems that succeeded them now face obsolescence as quantum computing advances. Understanding this threat emphasizes the urgency of evolving toward quantum-resistant cryptography to safeguard privacy and data security in the quantum era.
