The Mechanics of Hashing Algorithms Explained

Category: Cryptography

Unlocking the Secrets Behind Hashing Algorithms

If you’ve ever wondered how data integrity is maintained, passwords are securely stored, or digital signatures remain trustworthy, understanding the mechanics of hashing algorithms is essential. Whether you're a cybersecurity professional verifying system security, a cryptography enthusiast unraveling the math behind cryptographic functions, or a student diving into secret communications, this post is designed for you. You've likely landed here aiming to deepen your knowledge of how these algorithms work under the hood—beyond just what a hash function is, to how it operates at a technical level including its mathematical structure, security properties, and evolving role with emerging quantum technologies.

Many resources provide shallow definitions or gloss over the intricate inner workings of hashing, leaving you wanting a clearer, more structured explanation that ties historical foundations to modern applications. This post stands apart by delivering a comprehensive walkthrough of hashing algorithm mechanics, highlighting their design principles, common constructions, critical security aspects, and the quantum implications reshaping cryptanalysis. By bridging theory with practical examples and use cases, you'll gain a richer, actionable understanding to strengthen your grasp on cryptographic integrity assurances.

Read on to explore detailed sections covering everything from basic concepts and popular algorithm types to collision resistance and post-quantum prospects. This guide is your roadmap to mastering hashing algorithms and appreciating their vital role in today’s security landscape.

- Unlocking the Secrets Behind Hashing Algorithms

- Fundamentals of Hashing: Definition, Purpose, and Core Properties

- Mathematical Foundations Behind Hash Functions

- History and Evolution of Hashing Algorithms

- Detailed Mechanics of Popular Hashing Algorithms

- Properties Ensuring Security: Collision Resistance, Pre-image Resistance, and Second Pre-image Resistance

- Common Attacks on Hash Functions and Their Mitigations

- Hashing in Digital Signatures and Message Authentication Codes (MACs)

- Impact of Quantum Computing on Hashing Algorithms

- Practical Use Cases: Password Hashing, Data Integrity Verification, and Blockchain

- Future Trends and Emerging Research in Hashing Technologies

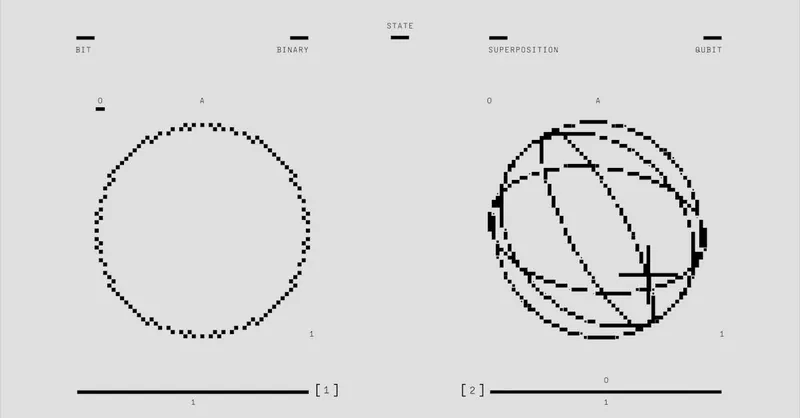

Fundamentals of Hashing: Definition, Purpose, and Core Properties

At its core, a hash function is a mathematical algorithm that transforms input data of arbitrary size into a fixed-size bit string, called a hash value or digest and usually displayed in hexadecimal. This seemingly simple process underpins many critical aspects of modern cryptography and data security. The primary purposes of hashing are to verify data integrity, support authentication, and store sensitive data like passwords without keeping the original content. Because the digest is consistent and, for practical purposes, distinctive for each input, hash functions help detect unauthorized changes, verify authenticity, and enable tamper-evident digital communication.

To fully grasp the significance of hashing in cryptography, it’s essential to understand its core properties:

- Determinism: The same input will always produce the exact same output hash. This consistency is vital for verifying data integrity across multiple instances or systems.

- Fixed Output Size: Regardless of input length, the output hash has a predetermined size (e.g., 256 bits for SHA-256), which simplifies storage, comparison, and cryptographic operations.

- Pre-image Resistance: Given a hash output, it is computationally infeasible to reverse-engineer or deduce the original input data, ensuring one-way security critical for password protection and digital signatures.

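- Collision Resistance: It is computationally infeasible to find two different inputs that produce the same hash output. Collisions must exist because inputs outnumber outputs, but a secure function makes them impractical to find, preventing malicious actors from substituting one message for another without detection.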

- Avalanche Effect: Small changes to input produce significantly different hash outputs, enhancing sensitivity and security by making patterns or relationships between inputs and hashes nearly impossible to exploit.
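Three of these properties (determinism, fixed output size, and the avalanche effect) can be observed directly. The following is a minimal sketch using Python's standard `hashlib`; the helper name `differing_bits` and the sample inputs are illustrative choices, not part of any standard.

```python
import hashlib

def differing_bits(a: bytes, b: bytes) -> int:
    """Count the bit positions in which two equal-length byte strings differ."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))

# Determinism: hashing the same input twice yields the same digest.
first = hashlib.sha256(b"hello world").digest()
again = hashlib.sha256(b"hello world").digest()

# Fixed output size: a short and a long input both give 32 bytes (256 bits).
short_digest = hashlib.sha256(b"a").digest()
long_digest = hashlib.sha256(b"a" * 1_000_000).digest()

# Avalanche effect: "d" and "e" differ in a single bit, yet roughly half
# of the 256 output bits are expected to change.
neighbour = hashlib.sha256(b"hello worle").digest()
flipped = differing_bits(first, neighbour)

print(first == again, len(short_digest), len(long_digest), flipped)
```

Pre-image and collision resistance cannot be demonstrated this way, since they are claims about what an attacker cannot feasibly compute.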

These fundamental characteristics make hashing functions indispensable tools in securing digital data and communications. From verifying file integrity and generating digital fingerprints to enabling blockchain consensus and secure key derivation, their role is both foundational and far-reaching in cryptographic protocols. Understanding these principles sets the stage for deeper exploration of specific algorithms, their mathematical underpinnings, and the emerging quantum challenges they face.


Mathematical Foundations Behind Hash Functions

At the heart of every robust hashing algorithm lie fundamental mathematical concepts that ensure security, efficiency, and collision resistance. Understanding these core principles not only demystifies how hash functions operate but also reveals why they're reliable tools for cryptography and data integrity. Three foundational elements form the backbone of many widely used hashing schemes: modular arithmetic, bitwise operations, and compression functions.

Modular Arithmetic: The Clockwork of Hashing

Modular arithmetic, often described as "clock arithmetic," involves performing calculations where numbers wrap around upon reaching a certain value called the modulus. In hashing algorithms like SHA-256 and MD5, additions are performed modulo 2^32, so intermediate values stay within the algorithm's fixed word size (32 bits for MD5, SHA-1, and SHA-256; 64 bits for SHA-512). This cyclical property provides:

- Fixed-length outputs: By constraining calculations within a modulus, hash outputs maintain consistent size.

- Efficient diffusion: Wrap-around arithmetic helps mix bits thoroughly, amplifying the avalanche effect.

- Deterministic behavior: Operations mod n guarantee repeatable results, essential for the deterministic nature of hash functions.
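As a small illustration of the wrap-around behavior, the sketch below adds 32-bit words modulo 2^32. The helper name `add32` is hypothetical; masking with `0xFFFFFFFF` is one common way to express the reduction in a language with unbounded integers.

```python
MASK32 = 0xFFFFFFFF  # 2**32 - 1

def add32(*words: int) -> int:
    """Add any number of words modulo 2**32, as MD5, SHA-1 and SHA-256 do."""
    return sum(words) & MASK32

# The largest 32-bit value plus one wraps around to zero.
print(hex(add32(0xFFFFFFFF, 1)))            # 0x0
# Two carries out of the top bit vanish; only the low 32 bits survive.
print(hex(add32(0x80000000, 0x80000000, 5)))  # 0x5
```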

Bitwise Operations: Manipulating Data at the Lowest Level

Hash functions rely heavily on bitwise operations—including AND, OR, XOR, NOT, as well as bit shifts and rotations—to scramble input data effectively. These operations are computationally inexpensive and ideal for implementing in hardware or software, enabling fast yet secure mixing of bits. Key advantages include:

- Creating non-linearity that strengthens collision resistance and pre-image resistance.

- Enhancing avalanche properties by changing output bits dramatically even with single-bit input modifications.

- Enabling complex transformations that make inverting the function or tracing outputs back to inputs significantly more difficult.
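The sketch below shows a few such primitives as they appear in the SHA-2 family: a 32-bit right rotation plus the Ch (choose) and Maj (majority) functions. The Python function names are illustrative.

```python
MASK32 = 0xFFFFFFFF

def rotr32(x: int, n: int) -> int:
    """Rotate a 32-bit word right by n bits (bits shifted out re-enter on the left)."""
    return ((x >> n) | (x << (32 - n))) & MASK32

def ch(x: int, y: int, z: int) -> int:
    """Choose: for each bit, take y where x is 1 and z where x is 0."""
    return ((x & y) ^ (~x & z)) & MASK32

def maj(x: int, y: int, z: int) -> int:
    """Majority: each output bit is the value held by at least two inputs."""
    return (x & y) ^ (x & z) ^ (y & z)

print(hex(rotr32(0x00000001, 1)))                  # 0x80000000
print(hex(ch(0xFFFFFFFF, 0x12345678, 0x9ABCDEF0)))  # 0x12345678
print(hex(maj(0xF0F0F0F0, 0xF0F0F0F0, 0x0F0F0F0F)))  # 0xf0f0f0f0
```

Ch and Maj are non-linear over XOR, which is what keeps the overall function from reducing to a solvable system of linear equations.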

Compression Functions: Building Blocks of Secure Hashing

The compression function is the iterative core of many hash algorithms, processing input data in blocks and condensing them into fixed-size hash values. This function takes two inputs—the current chaining value and the next data block—and outputs a new chaining value, accumulating the entire input’s influence securely and irreversibly. Compression functions are designed to satisfy strict security criteria:

- Collision resistance: Preventing two different inputs from producing the same output at any iteration.

- Pre-image resistance: Ensuring that it’s computationally infeasible to revert outputs back to original inputs.

- Avalanche effect: Maximizing sensitivity so that minor input changes propagate unpredictably through the computation.

By iterating the compression function over all input blocks, hashing algorithms extend their secure, fixed-size output over arbitrarily large datasets, making them versatile for a broad range of cryptographic applications.
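The iteration can be sketched as follows. This is a toy construction for illustration only: `toy_compress` simply reuses SHA-256 as a stand-in compression function, and the all-zero `IV` and 64-byte block size are assumptions chosen for the example, not the parameters of any standardized hash.

```python
import hashlib
import struct

BLOCK_SIZE = 64          # bytes per message block (assumed for this toy)
IV = bytes(32)           # hypothetical all-zero initial chaining value

def toy_compress(chaining_value: bytes, block: bytes) -> bytes:
    """Stand-in compression function: (chaining value, block) -> new chaining value."""
    return hashlib.sha256(chaining_value + block).digest()

def pad(message: bytes) -> bytes:
    """Append 0x80, zero bytes, and the 64-bit message length in bits."""
    zeros = (BLOCK_SIZE - 9 - len(message)) % BLOCK_SIZE
    return message + b"\x80" + b"\x00" * zeros + struct.pack(">Q", len(message) * 8)

def toy_hash(message: bytes) -> bytes:
    """Feed each padded block through the compression function in turn."""
    state = IV
    padded = pad(message)
    for offset in range(0, len(padded), BLOCK_SIZE):
        state = toy_compress(state, padded[offset:offset + BLOCK_SIZE])
    return state

print(toy_hash(b"short").hex())
print(toy_hash(b"x" * 10_000).hex())  # same 32-byte output size
```

Encoding the message length in the final block is the strengthening step that lets collision resistance of the compression function carry over to the whole hash.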

Together, these mathematical foundations—modular arithmetic, bitwise operations, and carefully crafted compression functions—form the intricate machinery that powers modern hashing algorithms. Their interplay guarantees that hash functions remain efficient, reliable, and resistant to cryptanalytic attacks, maintaining trust across diverse fields such as data integrity verification, password security, and blockchain technologies.


History and Evolution of Hashing Algorithms

The development of hashing algorithms reflects a continuous pursuit of stronger security and greater efficiency in cryptographic systems. Early hash functions emerged in the 1970s and 1980s alongside the rise of public-key cryptography and digital signatures, setting the foundation for modern data integrity tools. The MD (Message Digest) family, particularly MD5, became widely adopted in the 1990s due to its simplicity and relatively fast performance. Designed by Ronald Rivest, MD5 produces a 128-bit hash output and was extensively used for file verification and password hashing.

However, the discovery of critical vulnerabilities in MD5—such as collision attacks demonstrated in the early 2000s—highlighted the urgent need for more secure alternatives. This led to the widespread adoption of the SHA (Secure Hash Algorithm) family, developed by the NSA and standardized by NIST. The progression from SHA-1 to the more robust SHA-2 family (including SHA-256 and SHA-512) marked significant improvements in collision resistance and pre-image resistance, addressing known weaknesses while maintaining operational efficiency. SHA-1 itself was later deprecated after practical collision attacks surfaced, pushing cryptographers to advance standardization efforts further.

Looking beyond SHA-2, the introduction of SHA-3 in 2015, based on the Keccak algorithm, incorporated a novel sponge construction providing enhanced security assurances and resilience against emerging cryptanalytic techniques. Throughout this evolution, several key security incidents—ranging from collision exploits to pre-image breakthroughs—have directly shaped algorithmic designs, fostering innovations like increased output sizes, improved diffusion mechanisms, and resistance against length-extension attacks.

In summary, the history of hashing algorithms is characterized by:

- Initial adoption of hash functions focused on speed and simplicity (e.g., MD5).

- Exposure of vulnerabilities prompting stronger, standardized algorithms (e.g., SHA-1 deprecation).

- Development of more advanced designs with superior cryptographic strength (e.g., SHA-2 and SHA-3).

- Continuous adaptation driven by real-world cryptanalysis, culminating in ongoing efforts toward quantum-resistant hashing methods.

This evolutionary journey underscores how the dynamic landscape of cryptanalysis and security threats continuously influences the design and deployment of hashing algorithms critical for maintaining trust in digital communications and data protection.


Detailed Mechanics of Popular Hashing Algorithms

To truly understand the security guarantees of cryptographic hash functions, it is essential to delve into the internal workings of widely used algorithms like SHA-1, SHA-256, and SHA-3. Despite sharing the same fundamental goals of ensuring data integrity and collision resistance, these algorithms employ distinct compression functions, message scheduling techniques, and iterative round transformations that define their strength and efficiency.

SHA-1: The Classic Iterative Compression

SHA-1 processes data in 512-bit blocks and produces a 160-bit hash output. Its core operation relies on:

- Message Schedule: The 512-bit input block is first divided into sixteen 32-bit words. These are expanded into 80 words using bitwise operations, primarily XORs and left rotations, to build a schedule that feeds the compression rounds.

- Compression Function: SHA-1 maintains five 32-bit chaining variables, updated through 80 rounds using boolean functions (AND, OR, XOR), modular addition (mod 2^32), and circular shifts.

- Rounds: The 80 rounds are split into four groups of 20, each group using its own logical function and additive constant to mix the data thoroughly and maximize diffusion and the avalanche effect.

While revolutionary at its introduction, SHA-1’s relatively small output size and structural vulnerabilities have made it susceptible to collision attacks, leading to its deprecation in security-critical contexts.
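To make the schedule and round structure concrete, here is a compact teaching sketch of SHA-1 in pure Python. It is meant for study only: SHA-1 should not be used where collision resistance matters, and real code should call a vetted library. The function names are illustrative.

```python
import hashlib
import struct

MASK = 0xFFFFFFFF

def rotl(x: int, n: int) -> int:
    """Rotate a 32-bit word left by n bits."""
    return ((x << n) | (x >> (32 - n))) & MASK

def sha1(message: bytes) -> bytes:
    # Five 32-bit chaining variables (the standard initial values).
    h = [0x67452301, 0xEFCDAB89, 0x98BADCFE, 0x10325476, 0xC3D2E1F0]
    padded = (message + b"\x80" + b"\x00" * ((55 - len(message)) % 64)
              + struct.pack(">Q", len(message) * 8))
    for offset in range(0, len(padded), 64):
        # Message schedule: 16 input words expanded to 80 via XOR and rotate.
        w = list(struct.unpack(">16I", padded[offset:offset + 64]))
        for t in range(16, 80):
            w.append(rotl(w[t - 3] ^ w[t - 8] ^ w[t - 14] ^ w[t - 16], 1))
        a, b, c, d, e = h
        for t in range(80):
            # Four groups of 20 rounds, each with its own function and constant.
            if t < 20:
                f, k = (b & c) | (~b & d), 0x5A827999
            elif t < 40:
                f, k = b ^ c ^ d, 0x6ED9EBA1
            elif t < 60:
                f, k = (b & c) | (b & d) | (c & d), 0x8F1BBCDC
            else:
                f, k = b ^ c ^ d, 0xCA62C1D6
            temp = (rotl(a, 5) + f + e + k + w[t]) & MASK
            a, b, c, d, e = temp, a, rotl(b, 30), c, d
        # Add the block's result into the chaining variables modulo 2**32.
        h = [(x + y) & MASK for x, y in zip(h, (a, b, c, d, e))]
    return struct.pack(">5I", *h)

print(sha1(b"abc").hex() == hashlib.sha1(b"abc").hexdigest())
```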

SHA-256: Enhanced Security with the SHA-2 Family

A member of the SHA-2 family, SHA-256 strengthens hashing security by increasing output length to 256 bits and employing a more complex message schedule and compression approach:

- Message Schedule: The 512-bit block is split into sixteen 32-bit words, expanded to 64 words using bitwise rotation, shifting, and XORs, markedly improving the mixing of input bits.

- Compression Function: It uses eight 32-bit working variables updated over 64 rounds. Each round performs modular additions combined with logical functions like Ch (choose), Maj (majority), and several custom Sigma functions involving bitwise rotations and shifts.

- Rounds: These rounds utilize a set of 64 constant 32-bit words derived from the fractional parts of the cube roots of the first 64 prime numbers — a design choice enhancing randomness and thwarting analytical attacks.

SHA-256’s design significantly boosts collision and pre-image resistance, making it the standard for secure hashing in blockchain technologies, digital signatures, and TLS/SSL certificates.
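The pieces described above fit together as in the following pure-Python teaching sketch. It derives the 64 round constants from the cube roots of the first 64 primes using exact integer arithmetic, and the initial state from the square roots of the first 8 primes. Helper names such as `first_primes` and `integer_cube_root` are illustrative; production code should use a vetted library instead.

```python
import hashlib
import math
import struct

MASK = 0xFFFFFFFF

def first_primes(count):
    primes, candidate = [], 2
    while len(primes) < count:
        if all(candidate % p for p in primes):
            primes.append(candidate)
        candidate += 1
    return primes

def integer_cube_root(n):
    """Largest integer r with r**3 <= n, found by binary search."""
    low, high = 0, 1 << (n.bit_length() // 3 + 1)
    while low < high:
        mid = (low + high + 1) // 2
        if mid ** 3 <= n:
            low = mid
        else:
            high = mid - 1
    return low

# First 32 fractional bits of the cube roots (K) and square roots (H0).
K = [integer_cube_root(p << 96) & MASK for p in first_primes(64)]
H0 = [math.isqrt(p << 64) & MASK for p in first_primes(8)]

def rotr(x, n):
    return ((x >> n) | (x << (32 - n))) & MASK

def sha256(message: bytes) -> bytes:
    state = list(H0)
    padded = (message + b"\x80" + b"\x00" * ((55 - len(message)) % 64)
              + struct.pack(">Q", len(message) * 8))
    for offset in range(0, len(padded), 64):
        # Message schedule: 16 words expanded to 64.
        w = list(struct.unpack(">16I", padded[offset:offset + 64]))
        for t in range(16, 64):
            s0 = rotr(w[t - 15], 7) ^ rotr(w[t - 15], 18) ^ (w[t - 15] >> 3)
            s1 = rotr(w[t - 2], 17) ^ rotr(w[t - 2], 19) ^ (w[t - 2] >> 10)
            w.append((w[t - 16] + s0 + w[t - 7] + s1) & MASK)
        a, b, c, d, e, f, g, h = state
        for t in range(64):
            big_s1 = rotr(e, 6) ^ rotr(e, 11) ^ rotr(e, 25)
            ch = (e & f) ^ (~e & g)
            t1 = (h + big_s1 + ch + K[t] + w[t]) & MASK
            big_s0 = rotr(a, 2) ^ rotr(a, 13) ^ rotr(a, 22)
            maj = (a & b) ^ (a & c) ^ (b & c)
            t2 = (big_s0 + maj) & MASK
            h, g, f, e, d, c, b, a = g, f, e, (d + t1) & MASK, c, b, a, (t1 + t2) & MASK
        state = [(x + y) & MASK for x, y in zip(state, (a, b, c, d, e, f, g, h))]
    return struct.pack(">8I", *state)

print(hex(K[0]), sha256(b"abc").hex() == hashlib.sha256(b"abc").hexdigest())
```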

SHA-3: The Sponge Construction Innovator

Distinct from SHA-1 and SHA-2’s Merkle-Damgård construction, SHA-3 employs the Keccak sponge function, revolutionizing the hashing process:

- Sponge Function: SHA-3 absorbs input bits into a fixed-size state through repeated XORing, then "squeezes" out the final hash after a series of permutation rounds, handling arbitrary input lengths flexibly.

- State Size and Rate: The algorithm operates on a 1600-bit state divided into a rate portion for input/output and a capacity portion for security. This separation enhances resistance to length-extension and collision attacks.

- Permutation Rounds: Each round applies five sequential steps — Theta, Rho, Pi, Chi, and Iota — combining rotations, permutations, and nonlinear transformations to scramble the internal state thoroughly.

- Security Parameters: The capacity determines resilience against attacks, allowing SHA-3 variants with different digest sizes (e.g., SHA3-256, SHA3-512) to balance security and performance.

By adopting the sponge construction and innovative permutation-based design, SHA-3 provides robust security even against advanced cryptanalytic techniques and aligns better with emerging post-quantum security considerations.
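The rate and capacity split can be worked out with simple arithmetic. The sketch below assumes the fixed-length SHA-3 variants, whose capacity is twice the digest length; `sponge_parameters` is a hypothetical helper. It also uses `hashlib`'s SHAKE128 to show the "squeeze" phase producing output of a caller-chosen length.

```python
import hashlib

STATE_BITS = 1600  # width of the Keccak-f[1600] state

def sponge_parameters(digest_bits: int) -> tuple:
    """Return (rate, capacity) in bits for a fixed-length SHA-3 variant."""
    capacity = 2 * digest_bits
    rate = STATE_BITS - capacity
    return rate, capacity

for bits in (224, 256, 384, 512):
    rate, capacity = sponge_parameters(bits)
    print(f"SHA3-{bits}: rate={rate} bits, capacity={capacity} bits")

# Fixed-length variant from the standard library.
digest = hashlib.sha3_256(b"sponge").digest()

# Extendable-output variant: squeezing longer output extends the shorter one.
short_out = hashlib.shake_128(b"sponge").digest(16)
long_out = hashlib.shake_128(b"sponge").digest(32)
print(len(digest), long_out.startswith(short_out))
```

A larger capacity leaves fewer bits per permutation call for absorbing input, which is why SHA3-512 processes data more slowly than SHA3-256.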

Understanding these contrasting internal architectures—from SHA-1’s iterative compression to SHA-3’s sponge model—illuminates how cryptographic hashing algorithms have evolved to meet increasingly demanding security requirements. Their distinct message schedules, compression functions, and round operations are carefully crafted to optimize diffusion, collision resistance, and computational efficiency, ensuring trustworthiness in a broad range of cryptographic applications.

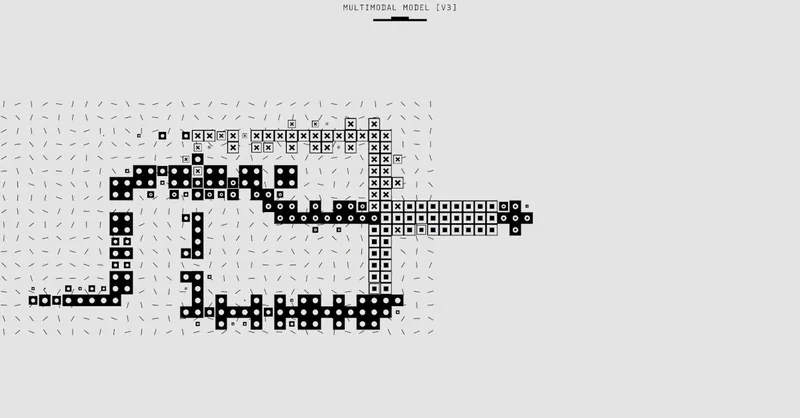

Image courtesy of Google DeepMind

Properties Ensuring Security: Collision Resistance, Pre-image Resistance, and Second Pre-image Resistance

The security strength of a cryptographic hashing algorithm hinges on three critical properties: collision resistance, pre-image resistance, and second pre-image resistance. These attributes prevent attackers from exploiting the hash function to compromise data integrity, authentication, or confidentiality. Each property plays a distinct role in fortifying cryptographic systems and underpins real-world applications such as digital signatures, password hashing, and blockchain integrity.

Collision Resistance: Avoiding Duplicate Hashes

Collision resistance means it is computationally infeasible to find two different inputs that produce the same hash output. If collisions were easy to find, an attacker could substitute legitimate data with malicious content bearing the same hash digest, undermining trust and security. For example, in digital signatures, collision resistance ensures that an attacker cannot prepare two different documents with the same hash and have a signature obtained on one be valid for the other.

Example:

Imagine a malware author who prepares two files with the identical SHA-256 hash: a harmless update that passes review and gets signed, and a malicious variant. Without collision resistance, the signature on the harmless file would also validate the malicious one, and an unsuspecting system might accept it as genuine, leading to critical security breaches.

Pre-image Resistance: The One-Way Nature of Hashing

Pre-image resistance refers to the difficulty of reversing a hash output to discover its original input. Essentially, given a hash value \( h \), it should be computationally infeasible to find any message \( m \) such that \( \text{hash}(m) = h \). This property is fundamental for password storage and cryptographic commitments, where revealing the original input would compromise sensitive information.

Example:

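When passwords are hashed and stored, pre-image resistance ensures that even if an attacker obtains the hash, they cannot feasibly invert it directly. It does not stop an attacker from guessing candidate passwords and hashing each one, which is why salts and deliberately slow password-hashing functions are also needed against offline brute-force and dictionary attacks.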

Second Pre-image Resistance: Protecting Against Targeted Forgery

Second pre-image resistance is the difficulty of finding a second input \( m_2 \neq m_1 \) that hashes to the same output as a given input \( m_1 \). Unlike collision resistance, where the attacker may choose both colliding inputs freely, second pre-image resistance concerns an attacker who must match the hash of a particular known message, which is vital in scenarios where the original input is fixed or known.

Example:

Consider an attacker aiming to alter a signed contract \( m_1 \) without invalidating its signature. If they could find \( m_2 \neq m_1 \) such that \( \text{hash}(m_1) = \text{hash}(m_2) \), the contract’s integrity would be compromised. Second pre-image resistance thwarts this by making it computationally impractical to discover such an alternate input.

These properties collectively ensure that hash functions behave as secure one-way functions with minimal likelihood of exploit through collisions or reversals. Their cryptographic strength is directly tied to the algorithm’s underlying design—larger output sizes and complex internal operations enhance resistance against these attack vectors. Understanding and verifying these properties helps security professionals select and implement hashing algorithms that maintain robust defenses against evolving cryptanalytic threats.
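The role of output size can be made tangible by deliberately weakening a hash. The sketch below truncates SHA-256 to 16 bits, an assumption made purely so that a brute-force second pre-image search finishes quickly; `truncated_hash` and `find_second_preimage` are hypothetical helpers.

```python
import hashlib
from itertools import count

def truncated_hash(data: bytes, n_bytes: int = 2) -> bytes:
    """Deliberately weak hash: the first n_bytes of SHA-256."""
    return hashlib.sha256(data).digest()[:n_bytes]

def find_second_preimage(original: bytes, n_bytes: int = 2):
    """Try candidate messages until one matches the original's truncated hash."""
    target = truncated_hash(original, n_bytes)
    for attempt in count():
        candidate = f"forgery-{attempt}".encode()
        if candidate != original and truncated_hash(candidate, n_bytes) == target:
            return candidate, attempt + 1

original = b"pay alice 10 coins"
forgery, tries = find_second_preimage(original)
print(forgery, tries)
print(truncated_hash(original) == truncated_hash(forgery))
```

With a 16-bit output the search is expected to take on the order of 2^16 attempts. Each additional output bit doubles that, which is why the same search against a full 256-bit digest is out of reach.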


Common Attacks on Hash Functions and Their Mitigations

While cryptographic hash functions are designed to provide strong security guarantees, their practical deployment often faces sophisticated attacks that exploit underlying vulnerabilities. Understanding these common attacks on hash functions—including collision attacks, length extension attacks, and others—is crucial for evaluating algorithm robustness and implementing effective mitigations to maintain data integrity and trustworthiness.

Collision Attacks: When Two Inputs Share the Same Hash

A collision attack attempts to find two distinct inputs \( m_1 \neq m_2 \) such that their hash outputs are identical, i.e., \( \text{hash}(m_1) = \text{hash}(m_2) \). Collisions undermine the fundamental premise of hash uniqueness and can facilitate forgery, data tampering, or impersonation in digital signatures and certificates.

- Birthday attacks leverage the birthday paradox: a generic collision search needs only about \( 2^{n/2} \) hash evaluations for an \( n \)-bit output, rather than the roughly \( 2^n \) needed to hit one specific digest, significantly accelerating collision discovery.

- Real-world collisions have been demonstrated against older algorithms such as MD5 and SHA-1, exposing vulnerabilities that rendered them unsuitable for secure applications.
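A generic birthday search can be sketched by remembering every digest seen so far and stopping at the first repeat. The example assumes SHA-256 truncated to 24 bits so that it finishes in a few thousand attempts; `find_collision` is a hypothetical helper and the message format is arbitrary.

```python
import hashlib
from itertools import count

def truncated_hash(data: bytes, n_bytes: int) -> bytes:
    """Deliberately weak hash: the first n_bytes of SHA-256."""
    return hashlib.sha256(data).digest()[:n_bytes]

def find_collision(n_bytes: int = 3):
    """Hash distinct messages until two share a truncated digest."""
    seen = {}
    for attempt in count():
        message = f"message-{attempt}".encode()
        digest = truncated_hash(message, n_bytes)
        if digest in seen:
            return seen[digest], message, attempt + 1
        seen[digest] = message

first, second, tries = find_collision(3)
print(first, second, tries)
```

For a 24-bit output the expected effort is a few thousand hashes, near 2^12, rather than the roughly 2^24 a second pre-image search would need. The trade-off is memory: the table of seen digests grows with the number of attempts.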

Mitigations:

- Use longer hash outputs: Algorithms with larger digest sizes like SHA-256 or SHA-3 (256 bits or more) exponentially increase collision resistance.

- Adopt hash functions with proven security: Transitioning from compromised algorithms (MD5, SHA-1) to robust standards like SHA-2 and SHA-3 is essential.

- Apply domain separation and salting: Including unique, unpredictable data in the input can prevent attackers from preparing collision pairs in advance.

Length Extension Attacks: Exploiting Iterative Structures

Length extension attacks target hash functions built on the Merkle-Damgård construction (e.g., MD5, SHA-1, SHA-256), where the hash state after processing a message can be used to compute the hash of an extended message without knowing the original input.

- This vulnerability enables an attacker to forge valid hashes for expanded messages by appending data, leading to signature forgery or message integrity bypasses.

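- For instance, if an application hashes a secret key concatenated with a message \( k \,\|\, m \), an attacker can exploit length extension to compute \( \text{hash}(k \,\|\, m \,\|\, \text{pad} \,\|\, m') \) without the key, where pad is the padding the hash function itself appended to \( k \,\|\, m \).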
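The attack can be reproduced against SHA-256 with a small amount of code. The sketch below reimplements the SHA-256 compression function so that it can be restarted from a published digest. It assumes the attacker knows the combined length of key and message; the key, message, and suffix values are made up for the demonstration.

```python
import hashlib
import struct

MASK = 0xFFFFFFFF

def _first_primes(count):
    primes, candidate = [], 2
    while len(primes) < count:
        if all(candidate % p for p in primes):
            primes.append(candidate)
        candidate += 1
    return primes

def _integer_cube_root(n):
    low, high = 0, 1 << (n.bit_length() // 3 + 1)
    while low < high:
        mid = (low + high + 1) // 2
        if mid ** 3 <= n:
            low = mid
        else:
            high = mid - 1
    return low

# SHA-256 round constants derived from cube roots of the first 64 primes.
K = [_integer_cube_root(p << 96) & MASK for p in _first_primes(64)]

def _rotr(x, n):
    return ((x >> n) | (x << (32 - n))) & MASK

def compress(state, block):
    """One SHA-256 compression step: (8-word state, 64-byte block) -> new state."""
    w = list(struct.unpack(">16I", block))
    for t in range(16, 64):
        s0 = _rotr(w[t - 15], 7) ^ _rotr(w[t - 15], 18) ^ (w[t - 15] >> 3)
        s1 = _rotr(w[t - 2], 17) ^ _rotr(w[t - 2], 19) ^ (w[t - 2] >> 10)
        w.append((w[t - 16] + s0 + w[t - 7] + s1) & MASK)
    a, b, c, d, e, f, g, h = state
    for t in range(64):
        t1 = (h + (_rotr(e, 6) ^ _rotr(e, 11) ^ _rotr(e, 25))
              + ((e & f) ^ (~e & g)) + K[t] + w[t]) & MASK
        t2 = ((_rotr(a, 2) ^ _rotr(a, 13) ^ _rotr(a, 22))
              + ((a & b) ^ (a & c) ^ (b & c))) & MASK
        h, g, f, e, d, c, b, a = g, f, e, (d + t1) & MASK, c, b, a, (t1 + t2) & MASK
    return [(x + y) & MASK for x, y in zip(state, (a, b, c, d, e, f, g, h))]

def md_padding(message_len):
    """Padding SHA-256 appends to a message of message_len bytes."""
    return (b"\x80" + b"\x00" * ((55 - message_len) % 64)
            + struct.pack(">Q", message_len * 8))

def length_extend(known_digest, known_len, suffix):
    """Forge hash(secret || msg || glue || suffix) from hash(secret || msg) alone."""
    state = list(struct.unpack(">8I", known_digest))  # digest is the internal state
    glue = md_padding(known_len)
    tail = suffix + md_padding(known_len + len(glue) + len(suffix))
    for offset in range(0, len(tail), 64):
        state = compress(state, tail[offset:offset + 64])
    return glue, struct.pack(">8I", *state)

# Server side (secret is never shown to the attacker).
secret = b"sixteen-byte-key"
message = b"amount=100&to=alice"
tag = hashlib.sha256(secret + message).digest()

# Attacker side: only tag, message, and len(secret) + len(message) are used.
glue, forged_tag = length_extend(tag, len(secret) + len(message), b"&to=mallory")
forged_message = message + glue + b"&to=mallory"
print(forged_tag == hashlib.sha256(secret + forged_message).digest())
```

The forgery works because the published digest is exactly the internal chaining state after the last block, so hashing can simply continue from it.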

Mitigations:

- Use hash constructions resistant to length extension: Sponge-based hashes like SHA-3 inherently mitigate this vulnerability by design.

- Employ HMAC (Hash-based Message Authentication Code): HMAC mixes the key into both an inner and an outer hash invocation, so the final output does not expose a state that an attacker can extend.

- Avoid naive concatenations of keys and messages: Proper cryptographic protocols prevent misuse that could expose length extension weaknesses.

Other Attack Vectors and Defenses

- Pre-image and second pre-image attacks: Though computationally intensive, advances in cryptanalysis necessitate using hashes with sufficient output lengths and complexity to maintain security margins.

- Side-channel attacks: Implementations must guard against timing, power analysis, and fault injection attacks that leak intermediate hash computations.

- Quantum attacks: Grover’s algorithm gives a quadratic speedup for pre-image search, halving the effective security level in bits; countermeasures include doubling hash sizes or adopting quantum-resistant designs.

By recognizing and mitigating these common hash function attacks, cryptography practitioners ensure the durability and trustworthiness of digital security systems. Modern algorithms embrace architectural improvements, strategic use of salts and keys, and new hash constructions to defend against evolving cryptanalytic threats, preserving the integrity and authenticity of sensitive digital communications.


Hashing in Digital Signatures and Message Authentication Codes (MACs)

Hashing algorithms are fundamental to securing communications, particularly through their critical roles in digital signatures and Message Authentication Codes (MACs). These applications leverage the unique properties of cryptographic hash functions to provide data integrity, authentication, and non-repudiation, ensuring that messages have not been tampered with and originate from verified sources.

Digital Signatures: Verifying Authenticity with Hashes

In digital signatures, a sender uses a hashing algorithm to create a fixed-size digest of a message, which is then signed with their private key (in textbook RSA this step is often described as encrypting the digest). This digest acts as a unique fingerprint of the original message:

- The recipient uses the sender’s public key to check the signature against a freshly computed hash of the received message (in textbook RSA, by recovering the signed digest and comparing the two).

- If verification succeeds, the message has not been altered and the signature was produced with the sender’s private key.

- Due to collision resistance and pre-image resistance, forging a valid signature without access to the private key is computationally infeasible.
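The hash-then-sign flow can be sketched with textbook RSA. This is a toy under loud assumptions: the modulus is built from two small Mersenne primes, there is no padding scheme, and the digest is reduced modulo `N`, all of which make it completely insecure. Real systems use a vetted library with a standard scheme such as RSA-PSS or ECDSA. All names and parameters here are illustrative.

```python
import hashlib

# Toy key: two small known primes (far too small for real use).
P = 2**61 - 1
Q = 2**31 - 1
N = P * Q
E = 65537
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (needs Python 3.8+)

def digest_as_int(message: bytes) -> int:
    """Hash the message and reduce it into the toy modulus range."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N

def sign(message: bytes) -> int:
    """Sign the digest, not the message: one modular exponentiation."""
    return pow(digest_as_int(message), D, N)

def verify(message: bytes, signature: int) -> bool:
    """Recover the signed digest with the public key and compare."""
    return pow(signature, E, N) == digest_as_int(message)

contract = b"Alice pays Bob 10 coins"
signature = sign(contract)
print(verify(contract, signature))                    # True
print(verify(b"Alice pays Bob 1000 coins", signature))  # False
```

Only the fixed-size digest passes through the expensive public-key operation, so signing cost does not grow with message size beyond the cost of hashing.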

By hashing before signing, digital signatures remain efficient, even for large datasets, while retaining robust security. This approach underpins many secure protocols such as TLS/SSL certificates, code signing, and blockchain transactions, where trust and data integrity are paramount.

HMAC: Strengthening Message Authentication with Hashes and Keys

The Hash-based Message Authentication Code (HMAC) is a widely adopted construction that combines a secret cryptographic key with a hashing algorithm to provide both data integrity and authentication. Unlike plain hashes, HMACs prevent attackers from generating valid authentication codes without knowledge of the secret key, even if the hash function used is publicly known.

HMAC operates as follows:

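- The secret key is padded to the hash function’s block size (or hashed first if it is longer) and XORed with two fixed constants, ipad and opad, to derive an inner key and an outer key.

- The message is hashed with the inner key prepended, and that digest is then hashed again with the outer key prepended.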

- The final output functions as a secure MAC, allowing recipients with the same secret key to verify message authenticity.
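These steps can be written out directly and checked against Python's standard `hmac` module. The function `hmac_sha256` below is a teaching sketch of the construction; in practice, call `hmac.new` and compare tags with `hmac.compare_digest`. The key and message are sample values.

```python
import hashlib
import hmac

BLOCK_SIZE = 64  # SHA-256 block size in bytes

def hmac_sha256(key: bytes, message: bytes) -> bytes:
    """HMAC built by hand: H((key ^ opad) || H((key ^ ipad) || message))."""
    if len(key) > BLOCK_SIZE:
        key = hashlib.sha256(key).digest()      # long keys are hashed first
    key = key.ljust(BLOCK_SIZE, b"\x00")         # then zero-padded to a block
    inner_key = bytes(b ^ 0x36 for b in key)     # ipad
    outer_key = bytes(b ^ 0x5C for b in key)     # opad
    inner_digest = hashlib.sha256(inner_key + message).digest()
    return hashlib.sha256(outer_key + inner_digest).digest()

key = b"shared-secret"
message = b"GET /api/orders?id=42"

manual_tag = hmac_sha256(key, message)
library_tag = hmac.new(key, message, hashlib.sha256).digest()

# Constant-time comparison avoids leaking how many bytes matched.
print(hmac.compare_digest(manual_tag, library_tag))
```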

HMAC inherits the cryptographic strengths of the underlying hash function—such as SHA-256 or SHA-3—but is inherently resistant to length extension attacks and collision vulnerabilities. This makes HMAC the standard choice for securing APIs, VPN connections, and other protocols requiring strong message authentication.

Other Protocols Leveraging Hashes for Security

Beyond digital signatures and HMACs, hashing algorithms are integral to protocols like:

- TLS/SSL Handshake: Hashes verify the integrity of exchanged keys and certificates.

- IPsec: Uses HMAC for authenticating IP packets.

- Password Authentication Protocols: Such as SCRAM, which combine salted hashes with keyed operations for secure login mechanisms.

These uses highlight how hashing solidifies the architecture of secure communications by delivering reliable integrity checks and authentication guarantees indispensable for protecting data in transit.

By exploiting the deterministic yet irreversible nature of cryptographic hash functions, digital signatures and MACs form the cornerstone of modern secure communication protocols. Their incorporation ensures that messages cannot be maliciously altered or forged without detection, thereby maintaining integrity, trust, and accountability across digital networks worldwide. Confidentiality is a separate goal that requires encryption.


Impact of Quantum Computing on Hashing Algorithms

The advent of quantum computing presents profound implications for the security of cryptographic hash functions, challenging long-standing assumptions of computational hardness that underpin their design. Among quantum attacks, Grover’s algorithm notably threatens the pre-image resistance of hash functions by offering a quadratic speedup in searching for inputs that map to a given hash output. While classical brute-force attacks require on the order of \( 2^n \) operations for an \( n \)-bit hash, Grover’s algorithm reduces this complexity to approximately \( 2^{n/2} \), halving the effective security level in bits.

Effects of Grover’s Algorithm on Hash Security

- Reduced Pre-image Resistance: Traditional hash sizes, such as 128-bit or 256-bit outputs, no longer guarantee the same level of pre-image resistance against quantum adversaries. For example, a 256-bit hash under Grover’s algorithm offers security akin to a 128-bit classical hash, which may be insufficient against future quantum-capable attackers.

- Collision Resistance Is Less Affected: Unlike pre-image resistance, collision resistance suffers less from quantum acceleration. The best-known quantum collision algorithm (Brassard, Høyer, and Tapp) lowers the cost from the classical birthday bound of about \( 2^{n/2} \) to about \( 2^{n/3} \) queries, and it needs so much quantum memory that its practical advantage is debated.

- Necessity to Increase Hash Lengths: To counteract the quadratic quantum speedup, cryptographers recommend doubling hash output lengths, for example moving from SHA-256 to SHA-512, when quantum threats must be considered to maintain equivalent security margins.
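The arithmetic behind these estimates is simple enough to tabulate. The sketch below uses generic attack costs only: \( 2^n \) and \( 2^{n/2} \) classically, \( 2^{n/2} \) for Grover pre-image search, and roughly \( 2^{n/3} \) for the quantum collision bound. It ignores constant factors, memory costs, and algorithm-specific weaknesses; `security_bits` is a hypothetical helper.

```python
def security_bits(output_bits: int) -> dict:
    """Generic security levels in bits (log2 of attack cost) for an n-bit hash."""
    return {
        "classical_preimage": output_bits,
        "grover_preimage": output_bits / 2,
        "classical_collision": output_bits / 2,
        "quantum_collision": output_bits / 3,
    }

for name, bits in (("SHA-256", 256), ("SHA-384", 384), ("SHA-512", 512)):
    levels = security_bits(bits)
    print(name, {k: round(v, 1) for k, v in levels.items()})
```

Read this way, moving from a 256-bit to a 512-bit digest restores a 256-bit pre-image margin against a Grover-style search.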

Ongoing Research into Quantum-Resistant Hash Functions

In response to the emerging quantum threat landscape, research is accelerating toward quantum-resistant hashing algorithms, which strive to retain strong security properties even in the presence of quantum adversaries. Key directions include:

- Designing Hashes with Larger Output Sizes: Increasing digest sizes is a straightforward mitigation aligned with Grover’s algorithm limitations.

- Exploring New Constructions Beyond Merkle-Damgård and Sponge Models: Alternate approaches that inherently complicate quantum search processes or reduce attack surfaces are under active study.

- Developing Post-Quantum Cryptographic Standards: Organizations like NIST are spearheading efforts to standardize cryptographic primitives, including hash-based signature schemes, that withstand quantum computation while balancing performance and implementation constraints.

- Hybrid Cryptographic Schemes: Combining classical and quantum-secure elements offers interim protection during the transition to quantum-safe systems.

While quantum computing’s full-scale practical impact remains on the horizon, understanding its potential to erode the security guarantees of existing hashing algorithms is critical. Security architects and cryptographers must proactively adapt by adopting longer hashes, applying quantum-resistant algorithms as they mature, and integrating post-quantum strategies to future-proof data integrity, authentication, and confidential communications against the quantum era’s challenges.


Practical Use Cases: Password Hashing, Data Integrity Verification, and Blockchain

Hashing algorithms are integral to numerous real-world security applications, where their cryptographic properties help keep stored credentials protected and data authentic and tamper-evident. Understanding how hashing is applied in practical scenarios highlights its critical role across cybersecurity, software development, and emerging technologies like blockchain.

Password Hashing: Safeguarding User Credentials

One of the most widespread uses of hashing is in password storage. Rather than storing plaintext passwords—which poses catastrophic risks if databases are compromised—systems store hashed versions of passwords. This process leverages the one-way nature of cryptographic hash functions, ensuring that even if attackers access the stored hashes, reversing them into original passwords is computationally infeasible.

To enhance security, best practices for password hashing include:

- Use of Salt: A unique, random value added to each password before hashing to prevent precomputed hash attacks (rainbow tables) and ensure identical passwords have distinct hashes.

- Slow Hash Functions: Employing specialized hashing algorithms designed for passwords, such as bcrypt, scrypt, or Argon2, that deliberately slow down hashing to impede brute-force attacks.

- Multiple Iterations (Key Stretching): Repeatedly applying the hash function makes guessing attacks more computationally expensive.
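Salting and key stretching can be sketched with the standard library's PBKDF2 implementation, used here as a stand-in because bcrypt and Argon2 require third-party packages. The iteration count is an assumed work factor that should be tuned to the deployment's hardware; `hash_password` and `verify_password` are hypothetical helper names.

```python
import hashlib
import hmac
import os

DEFAULT_ITERATIONS = 600_000  # assumed work factor; tune for your hardware

def hash_password(password: str, iterations: int = DEFAULT_ITERATIONS):
    """Return (salt, iterations, derived_key) for storage alongside the user record."""
    salt = os.urandom(16)  # unique random salt per password
    key = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)
    return salt, iterations, key

def verify_password(password: str, salt: bytes, iterations: int, expected: bytes) -> bool:
    """Re-derive the key with the stored salt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)
    return hmac.compare_digest(candidate, expected)
```

A typical flow stores the salt, iteration count, and derived key at registration, then calls `verify_password` at login. Storing the iteration count with each record allows the work factor to be raised later without invalidating existing hashes.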

These techniques collectively make password hashes resilient against offline attacks, limiting damage even if hashes are leaked. Strong password hashing strategies are a cornerstone of user authentication security in web applications, operating systems, and identity management systems.

Data Integrity Verification: Ensuring Authenticity and Tamper Detection

Hash functions play a fundamental role in verifying data integrity by generating fixed-size digests that serve as fingerprints for files, messages, or software. When data is transferred over networks or stored long-term, hashing allows recipients or systems to validate that content remains unaltered.

Common data integrity use cases include:

- File Checksums: Tools generate hash digests (e.g., SHA-256) to verify downloads or backups, detecting corruption or tampering.

- Digital Signatures: Hashes compress message data before signing, allowing efficient and secure verification.

- Version Control Systems: Hashes uniquely identify file versions, ensuring consistency across distributed repositories.
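A file checksum is usually computed in chunks so that large files never need to fit in memory. The following is a minimal sketch; `file_sha256`, the chunk size, and the temporary demo file are illustrative choices.

```python
import hashlib
import os
import tempfile

def file_sha256(path: str, chunk_size: int = 65536) -> str:
    """Stream a file through SHA-256 and return the hex digest."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

# Demo: write a temporary file, hash it, then clean up.
demo_payload = b"release artifact contents\n" * 10_000
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(demo_payload)
    demo_path = tmp.name
try:
    demo_digest = file_sha256(demo_path)
finally:
    os.remove(demo_path)

print(demo_digest)
```

The recipient compares this digest with one published through a trusted channel. A checksum fetched from the same compromised server as the file detects corruption but not deliberate tampering.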

Best practices to maintain strong integrity checks emphasize the use of collision-resistant hashes and secure key management in signed contexts to prevent forgery or replay attacks.

Blockchain: Trustless Consensus and Immutable Ledgers

Perhaps one of the most transformative applications of hashing is in blockchain technology, where it underpins the security, immutability, and consensus mechanisms of distributed ledgers. Hash functions are crucial to:

- Linking Blocks: Each block contains the hash of the previous block, forming a chain that quickly exposes tampering, since altering one block changes subsequent hashes.

- Proof of Work (PoW): Miners repeatedly hash candidate blocks with different nonce values until the digest meets a difficulty target, which demonstrates that computational work was spent to validate transactions.

- Transaction Integrity: Hashes uniquely identify transactions and blocks, enabling efficient lookup and verification.

- Merkle Trees: Hash-based data structures aggregate and verify large volumes of transactions efficiently and securely.
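To make the Merkle tree idea concrete, here is a small sketch that folds a list of transactions into a single root hash. The `0x00`/`0x01` prefixes that separate leaf hashes from interior hashes and the rule of duplicating the last node on odd-sized levels are illustrative choices; real systems each define their own exact rules, so this will not reproduce any particular chain's roots.

```python
import hashlib


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves: list[bytes]) -> bytes:
    """Hash leaves pairwise, level by level, until one root digest remains."""
    if not leaves:
        raise ValueError("need at least one leaf")
    # Prefix leaf hashes so a leaf can never be confused with an interior node.
    level = [sha256(b"\x00" + leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # assumed rule: duplicate the last node
        level = [
            sha256(b"\x01" + level[i] + level[i + 1])
            for i in range(0, len(level), 2)
        ]
    return level[0]
```

Changing any single transaction changes its leaf hash and therefore every hash on the path up to the root, so comparing one 32-byte root is enough to detect a modification anywhere in the set.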

For secure blockchain implementations, it is critical to use robust, collision-resistant hashing algorithms—such as SHA-256 in Bitcoin or Keccak variants in Ethereum—to prevent attacks that could compromise ledger consistency. Additionally, maintaining cryptographic agility to upgrade hashing standards as quantum threats evolve remains a best practice for blockchain resilience.
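Block linking and proof of work can be sketched in a few lines. This is a toy model under stated assumptions: blocks are plain dictionaries serialized as JSON, difficulty is expressed as a number of leading zero hex digits, and a single SHA-256 pass is used. Bitcoin, by contrast, hashes a binary header twice and compares the result to a numeric target.

```python
import hashlib
import json


def block_hash(block: dict) -> str:
    """Hash a canonical JSON encoding of the block."""
    encoded = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def mine_block(prev_hash: str, data: str, difficulty: int = 3) -> dict:
    """Try nonces until the block hash starts with `difficulty` zero hex digits."""
    nonce = 0
    while True:
        block = {"prev_hash": prev_hash, "data": data, "nonce": nonce}
        if block_hash(block).startswith("0" * difficulty):
            return block
        nonce += 1


def chain_is_valid(chain: list[dict], difficulty: int = 3) -> bool:
    """Check each block's proof of work and its link to the previous block."""
    for i, block in enumerate(chain):
        if not block_hash(block).startswith("0" * difficulty):
            return False
        if i > 0 and block["prev_hash"] != block_hash(chain[i - 1]):
            return False
    return True
```

Editing the data in an early block changes its hash, so the `prev_hash` stored in the next block no longer matches and validation fails. An attacker would have to redo the proof of work for that block and every block after it.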

Connecting the theoretical mechanics of hash functions to these practical applications shows that secure, well-implemented hashing algorithms are indispensable for protecting passwords, ensuring data integrity, and enabling trustless blockchain systems. Following recommended practices, such as salting passwords, choosing appropriate hash functions, and understanding domain-specific risks, maximizes security and reliability across these domains.


Future Trends and Emerging Research in Hashing Technologies

As the digital ecosystem expands with interconnected devices and evolving threats, hashing technologies continue to evolve to meet demands for greater efficiency, security, and adaptability. One of the most significant trends is the development of lightweight hashing algorithms tailored for the Internet of Things (IoT) and other constrained environments, which need fast, secure hash functions that provide data integrity and authentication without exhausting limited computational resources.

Lightweight Hashing for IoT and Embedded Systems

IoT devices, sensors, and embedded systems often operate under strict limitations of power, memory, and processing capability. Traditional heavyweight hash functions like SHA-256 may be too resource-intensive for these platforms. Consequently, research focuses on designing lightweight hash functions that:

- Maintain critical cryptographic properties such as collision resistance and pre-image resistance.

- Minimize computational overhead and memory footprint.

- Are compatible with low-power hardware architectures, enabling real-time secure communications.

Examples include SPONGENT and PHOTON, which use streamlined sponge constructions with compact permutations, and Lesamnta-LW, which is built around a lightweight block cipher rather than a sponge. All three rely on optimized bitwise operations to balance performance and security. These designs help secure IoT deployments ranging from smart homes to industrial control systems.
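The sponge idea behind designs such as SPONGENT and PHOTON can be illustrated with a deliberately tiny model. Everything here is an assumption made for teaching purposes: the 4-byte rate and capacity, the round count, the simplified padding, and above all the `toy_permutation`, which is not cryptographically secure and is unrelated to the permutations those algorithms actually use.

```python
RATE = 4        # bytes XORed in (absorb) or read out (squeeze) per permutation call
CAPACITY = 4    # hidden state bytes that are never output directly
STATE_SIZE = RATE + CAPACITY
ROUNDS = 12


def toy_permutation(state: bytearray) -> None:
    """Scramble the state in place. Invertible, but NOT secure."""
    n = len(state)
    for rnd in range(ROUNDS):
        state[0] ^= rnd + 1                       # round constant
        for i in range(n):                        # byte substitution (bijective: 5 is odd)
            state[i] = (state[i] * 5 + 1) & 0xFF
        for i in range(n):                        # diffusion into the neighbouring byte
            rotated = ((state[i] << 1) | (state[i] >> 7)) & 0xFF
            state[(i + 1) % n] ^= rotated


def toy_sponge(message: bytes, digest_len: int = 8) -> bytes:
    """Absorb the padded message block by block, then squeeze out the digest."""
    padded = message + b"\x80"                    # simplified padding, not pad10*1
    padded += b"\x00" * (-len(padded) % RATE)
    state = bytearray(STATE_SIZE)
    for offset in range(0, len(padded), RATE):    # absorbing phase
        for i in range(RATE):
            state[i] ^= padded[offset + i]
        toy_permutation(state)
    output = bytearray()
    while len(output) < digest_len:               # squeezing phase
        output += state[:RATE]
        toy_permutation(state)
    return bytes(output[:digest_len])
```

The structure is what matters: only the rate portion of the state ever touches input or output, while the capacity portion carries hidden state between calls. Lightweight designs shrink the state and simplify the permutation to save memory and energy, trading throughput for footprint.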

Advancements in Cryptographic Standards and Algorithms

Standardization bodies such as NIST and ISO/IEC actively drive the progress of hashing technologies through ongoing evaluations and updates to cryptographic standards. Recent efforts include:

- Post-quantum cryptography standardization: Integration of stateful hash-based signature schemes like XMSS (eXtended Merkle Signature Scheme) and LMS (Leighton-Micali Signature), specified in NIST SP 800-208, whose security rests only on the properties of the underlying hash function and which therefore offer quantum-resistant digital signatures.

- Enhancements to existing standards that incorporate domain separation techniques, salting mechanisms, and resistance to emerging attack vectors including side-channel and fault injection attacks.

- Development of configurable hash functions adaptable to varying security-performance trade-offs to address diverse application needs.

These initiatives help hashing algorithms evolve to withstand new cryptanalytic methods and meet future security requirements.
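To show how a signature can be built from nothing but a hash function, here is a sketch of a Lamport one-time signature. It is a simpler relative of the one-time schemes used inside XMSS and LMS, not an implementation of either, and the function names are assumptions for this example. Each key pair must sign only one message.

```python
import hashlib
import secrets


def H(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def keygen():
    """Private key: 256 pairs of random secrets. Public key: their hashes."""
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(H(a), H(b)) for a, b in sk]
    return sk, pk


def message_bits(message: bytes) -> list[int]:
    """The 256 bits of SHA-256(message), most significant bit first."""
    digest = H(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def sign(sk, message: bytes) -> list[bytes]:
    """Reveal one secret from each pair, selected by the corresponding digest bit."""
    return [sk[i][bit] for i, bit in enumerate(message_bits(message))]


def verify(pk, message: bytes, signature: list[bytes]) -> bool:
    """Hash each revealed secret and compare it with the matching public value."""
    if len(signature) != 256:
        return False
    bits = message_bits(message)
    return all(H(sig) == pk[i][bit] for i, (sig, bit) in enumerate(zip(signature, bits)))
```

Forging a signature requires inverting the hash on values that were never revealed, which is why such schemes are considered resistant to quantum attacks. Merkle-tree-based schemes like XMSS and LMS extend the idea by authenticating many one-time keys under a single public root, at the cost of tracking which keys have been used.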

Evolving Recommendations by Standards Bodies

In response to both classical and quantum threats, standards bodies recommend:

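- Adopting larger digest sizes (e.g., SHA-512, SHA3-512) to maintain security margins against quantum attacks. Grover's algorithm roughly halves the effective preimage security in bits, so a 512-bit digest still retains about 256 bits of preimage security.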

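- Phasing out older algorithms with practical collision attacks, such as MD5 and SHA-1, in favor of SHA-2 or SHA-3; preferring SHA-3 or HMAC where length-extension attacks are a concern; and considering post-quantum hash-based constructions for signatures.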

- Emphasizing cryptographic agility, allowing systems to switch hash algorithms without major architectural overhaul as newer, more secure hashes become available.

- Encouraging lightweight and hardware-friendly algorithm adoption for emerging domains like IoT, automotive security, and embedded cryptosystems.
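One simple way to approximate cryptographic agility is to store the algorithm name alongside every digest, so that the default can change while older records remain verifiable. The sketch below assumes a hypothetical `algorithm$hexdigest` storage format and an illustrative allow-list; neither comes from a standard.

```python
import hashlib
import hmac

DEFAULT_ALGORITHM = "sha3_256"  # assumed current policy; change it in one place
ALLOWED_ALGORITHMS = frozenset({"sha256", "sha512", "sha3_256", "sha3_512"})


def tagged_digest(data: bytes, algorithm: str = DEFAULT_ALGORITHM) -> str:
    """Return 'algorithm$hexdigest' so the record is self-describing."""
    return f"{algorithm}${hashlib.new(algorithm, data).hexdigest()}"


def verify_tagged(data: bytes, tagged: str) -> bool:
    """Verify against whichever allowed algorithm the record names."""
    algorithm, _, expected = tagged.partition("$")
    if algorithm not in ALLOWED_ALGORITHMS:
        return False  # retired or unknown algorithms are rejected outright
    actual = hashlib.new(algorithm, data).hexdigest()
    return hmac.compare_digest(actual.encode("utf-8"), expected.encode("utf-8"))
```

Retiring an algorithm then means removing it from the allow-list and re-hashing affected records, rather than redesigning the storage format.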

Collectively, these future trends and research directions highlight an active, dynamic landscape in hashing technologies. They underscore an ongoing commitment to strengthening cryptographic hash functions against evolving threats while optimizing their suitability for a broad spectrum of environments—from cloud servers to edge devices—ensuring the continued reliability of secure communications and data protection in an increasingly interconnected world.
